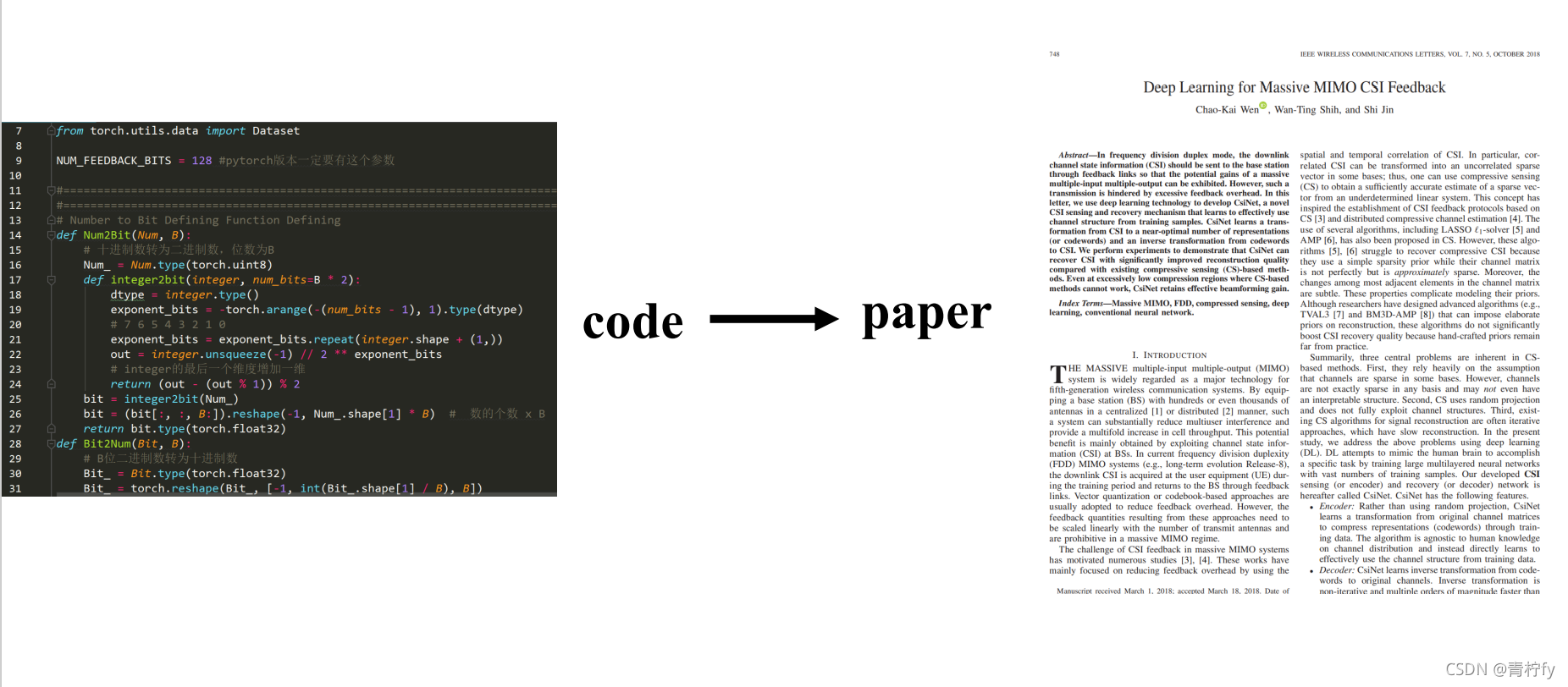

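This post is a write-up of what I learned while studying a paper, prompted by the AI + Wireless Communication competition.

To get the paper, search for the reference below (for example on Baidu) and download it from the IEEE website: C. Wen, W. Shih and S. Jin, "Deep Learning for Massive MIMO CSI Feedback", in IEEE Wireless Communications Letters, vol. 7, no. 5, pp. 748-751, Oct. 2018, doi: 10.1109/LWC.2018.2818160.

Let's get started!

The starting point is the sample code from the competition website. If you read it cold it is hard to follow, so we work backward from the code to the paper.

The paper is short, so we go through it piece by piece.

The code comes in a Torch version and a TensorFlow version, shown below.

The Torch version runs without problems. The TensorFlow version seems to have some issues. I am a beginner too, and I could not solve them for a long time.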

# TensorFlow version

# modelDesign module

```python
"""
Note:

1.This file is used for designing the structure of encoder and decoder.

2.The neural network structure in this model file is CsiNet, more details about CsiNet can be found in [1].

[1] C. Wen, W. Shih and S. Jin, "Deep Learning for Massive MIMO CSI Feedback", in IEEE Wireless Communications Letters, vol. 7, no. 5, pp. 748-751, Oct. 2018, doi: 10.1109/LWC.2018.2818160.

3.The output of the encoder must be the bitstream.
"""
#=======================================================================================================================
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
```

```python
#=======================================================================================================================
# Number to Bit Defining Function Defining
def Num2Bit(Num, B):
    # Numbers to bits: each decimal number becomes a B-bit binary number
    Num_ = Num.numpy()
    # np.array(Num_, np.uint8) gives 8-bit integers; np.unpackbits expands each into 8 bits (MSB first),
    # and [:, :, (8-B):] keeps only the last B of them
    bit = (np.unpackbits(np.array(Num_, np.uint8), axis=1).reshape(-1, Num_.shape[1], 8)[:, :, (8-B):]).reshape(-1, Num_.shape[1] * B)
    bit = bit.astype(np.float32)  # astype returns a new array, so the result must be assigned
    return tf.convert_to_tensor(bit, dtype=tf.float32)

# Bit to Number Function Defining
def Bit2Num(Bit, B):
    # Bits to numbers: each group of B bits becomes one decimal number
    Bit_ = Bit.numpy()
    Bit_ = Bit_.astype(np.float32)
    Bit_ = np.reshape(Bit_, [-1, int(Bit_.shape[1] / B), B])
    num = np.zeros(shape=np.shape(Bit_[:, :, 1]))
    for i in range(B):
        num = num + Bit_[:, :, i] * 2 ** (B - 1 - i)
    return tf.cast(num, dtype=tf.float32)
#=======================================================================================================================
```
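
You can check the two helpers above without TensorFlow. The sketch below uses only NumPy and assumes integer inputs in [0, 2^B). The names `num_to_bits` and `bits_to_num` are illustrative and are not part of the competition code. The sketch follows `Num2Bit` step by step. It replaces the `Bit2Num` loop with a dot product against the powers of two, which computes the same weighted sum.

```python
import numpy as np

def num_to_bits(nums, B):
    # nums: (batch, n) integers in [0, 2**B); returns (batch, n * B) bits, MSB first
    bits8 = np.unpackbits(nums.astype(np.uint8), axis=1)       # every uint8 -> 8 bits
    bits8 = bits8.reshape(-1, nums.shape[1], 8)                # (batch, n, 8)
    return bits8[:, :, 8 - B:].reshape(-1, nums.shape[1] * B)  # keep the low B bits

def bits_to_num(bits, B):
    # Inverse: weight each group of B bits by 2**(B-1), ..., 2**0 and sum
    groups = bits.reshape(-1, bits.shape[1] // B, B)
    weights = 2 ** np.arange(B - 1, -1, -1)
    return groups @ weights

nums = np.array([[5, 15, 0, 9]])
bits = num_to_bits(nums, 4)
print(bits.tolist())                  # [[0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1]]
print(bits_to_num(bits, 4).tolist())  # [[5, 15, 0, 9]]
```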

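```python
#=======================================================================================================================
# Quantization and Dequantization Layers Defining
@tf.custom_gradient  # decorator: supplies a hand-written gradient for this non-differentiable op
def QuantizationOp(x, B):
    # Uniform quantization with B bits (by rounding), then conversion to a bitstream
    step = tf.cast((2 ** B), dtype=tf.float32)
    result = tf.cast((tf.round(x * step - 0.5)), dtype=tf.float32)
    result = tf.py_function(func=Num2Bit, inp=[result, B], Tout=tf.float32)

    def custom_grad(dy):
        # dy holds one value per bit, but x holds one value per number, so returning dy
        # unchanged (as the original code did) does not match the shape of x.
        # Average the B bit-gradients of each number, as the PyTorch backward does.
        grad = tf.reduce_mean(tf.reshape(dy, [tf.shape(dy)[0], -1, B]), axis=2)
        return grad, None  # B is a constant: no gradient

    return result, custom_grad

class QuantizationLayer(tf.keras.layers.Layer):
    def __init__(self, B, **kwargs):
        super(QuantizationLayer, self).__init__(**kwargs)  # pass on kwargs such as name when a saved model is loaded
        self.B = B

    def call(self, x):
        # apply the quantization op
        return QuantizationOp(x, self.B)

    def get_config(self):
        # return the layer configuration
        # Implement get_config to enable serialization. This is optional.
        base_config = super(QuantizationLayer, self).get_config()
        base_config['B'] = self.B
        return base_config

@tf.custom_gradient
def DequantizationOp(x, B):
    # Dequantization: groups of B bits -> integers -> values in (0, 1)
    x = tf.py_function(func=Bit2Num, inp=[x, B], Tout=tf.float32)
    step = tf.cast((2 ** B), dtype=tf.float32)
    result = tf.cast((x + 0.5) / step, dtype=tf.float32)
    # dividing by step normalizes back to (0, 1)

    def custom_grad(dy):
        # dy holds one value per number, while the input has B bits per number:
        # give each bit 1/B of its number's gradient, as the PyTorch backward does
        grad = tf.tile(tf.expand_dims(dy / tf.cast(B, tf.float32), axis=2), [1, 1, B])
        grad = tf.reshape(grad, [tf.shape(dy)[0], -1])
        return grad, None

    return result, custom_grad

class DeuantizationLayer(tf.keras.layers.Layer):  # (sic) the name is kept because saved models refer to it
    # dequantization layer, B bits per value
    def __init__(self, B, **kwargs):
        super(DeuantizationLayer, self).__init__(**kwargs)
        self.B = B

    def call(self, x):
        # apply the dequantization op
        return DequantizationOp(x, self.B)

    def get_config(self):
        base_config = super(DeuantizationLayer, self).get_config()
        base_config['B'] = self.B
        return base_config
#=======================================================================================================================
```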
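
Apart from the bit packing, `QuantizationOp` and `DequantizationOp` perform plain uniform quantization with 2^B levels. `round(16x - 0.5)` picks the interval index from 0 to 15, and `(q + 0.5) / 16` maps the index back to the centre of its interval. The round-trip error is therefore at most half a step, 1/32. The NumPy sketch below reproduces only that arithmetic. It leaves out bit packing and gradients, and `quantize`/`dequantize` are illustrative names.

```python
import numpy as np

def quantize(x, B):
    # Arithmetic of QuantizationOp without the bit packing: (0, 1) -> integers 0 .. 2**B - 1
    step = 2 ** B
    return np.round(x * step - 0.5)

def dequantize(q, B):
    # Arithmetic of DequantizationOp: integer index -> centre of its interval of width 1 / 2**B
    step = 2 ** B
    return (q + 0.5) / step

x = np.linspace(0.001, 0.999, 1000)   # sigmoid outputs lie strictly inside (0, 1)
q = quantize(x, 4)
x_hat = dequantize(q, 4)
max_err = np.abs(x - x_hat).max()
print(q.max(), max_err)               # q.max() is 15.0; max_err never exceeds 1/32
```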

#=======================================================================================================================

# Encoder and Decoder Function Defining

def Encoder(enc_input,num_feedback_bits):

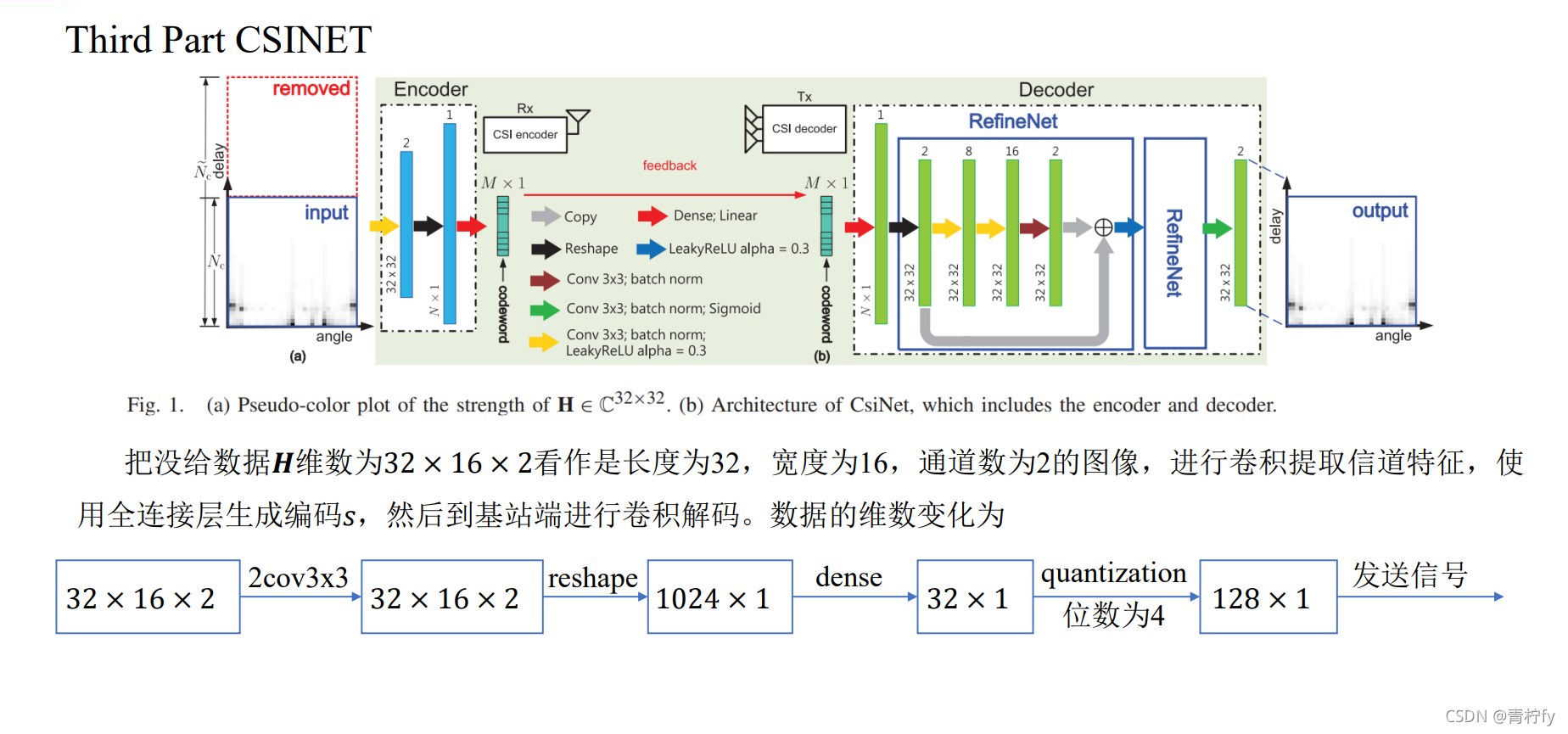

num_quan_bits = 4 # 量化位数

def add_common_layers(y):

y = keras.layers.BatchNormalization()(y) # 批量归一化

y = keras.layers.LeakyReLU()(y)

return y

# Leaky ReLUs

# ReLU是将所有的负值都设为零,相反,Leaky ReLU是给所有负值赋予一个非零斜率。

# 以数学的方式我们可以表示为:

# yi = xi if xi>=0

# yi = xi/ai if xi<0

# ai是(1,+∞)区间内的固定参数。

h = layers.Conv2D(3, (3, 3), padding='SAME', data_format='channels_last')(enc_input)

# 通道数(卷积核数)为3,3x3卷积核卷积,padding zeros 保持矩阵大小不变,data_format 数据格式最后一个数为通道数

# 生成三个特征层

h = add_common_layers(h) # 批量归一化 + 激活函数leakyRULU激活

h = layers.Flatten()(h) # 把矩阵展为向量

h = layers.Dense(1024, activation='sigmoid')(h)

# 实现神经网络里的全连接层

# 将h的最后一维改成1024,并且使用'sigmoid'函数激活

h = layers.Dense(units=int(num_feedback_bits / num_quan_bits), activation='sigmoid')(h)

# 将h的最后一维改成 反馈信号最大容量比特数/量化位数 ,并且使用'sigmoid'函数激活

enc_output = QuantizationLayer(num_quan_bits)(h)

# 将h量化 位数为4 得到编码输出 数据总量=num_feedback_bits

return enc_output
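
The two ways of writing Leaky ReLU describe the same function. Dividing by a is the same as multiplying by a slope alpha = 1/a. Keras and PyTorch are parameterised by the slope: the default is 0.3 in Keras 2.x, and the PyTorch version passes `negative_slope=0.3` explicitly. The NumPy sketch below is only an illustration and does not use the Keras layer itself.

```python
import numpy as np

def leaky_relu(x, alpha=0.3):
    # Slope form used by Keras and by F.leaky_relu: x if x >= 0, alpha * x otherwise
    return np.where(x >= 0, x, alpha * x)

def leaky_relu_div(x, a):
    # Form written in the comment: x if x >= 0, x / a otherwise, with a in (1, +inf)
    return np.where(x >= 0, x, x / a)

x = np.array([-3.0, -1.0, 0.0, 2.0])
# The two forms coincide when alpha = 1 / a
print(np.allclose(leaky_relu(x, alpha=1 / 5), leaky_relu_div(x, 5)))  # True
```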

def Decoder(dec_input,num_feedback_bits):

num_quan_bits = 4 # 量化位数4

def add_common_layers(y):

y = keras.layers.BatchNormalization()(y) # 批量归一化

y = keras.layers.LeakyReLU()(y) # 激活函数leakyRELU

return y

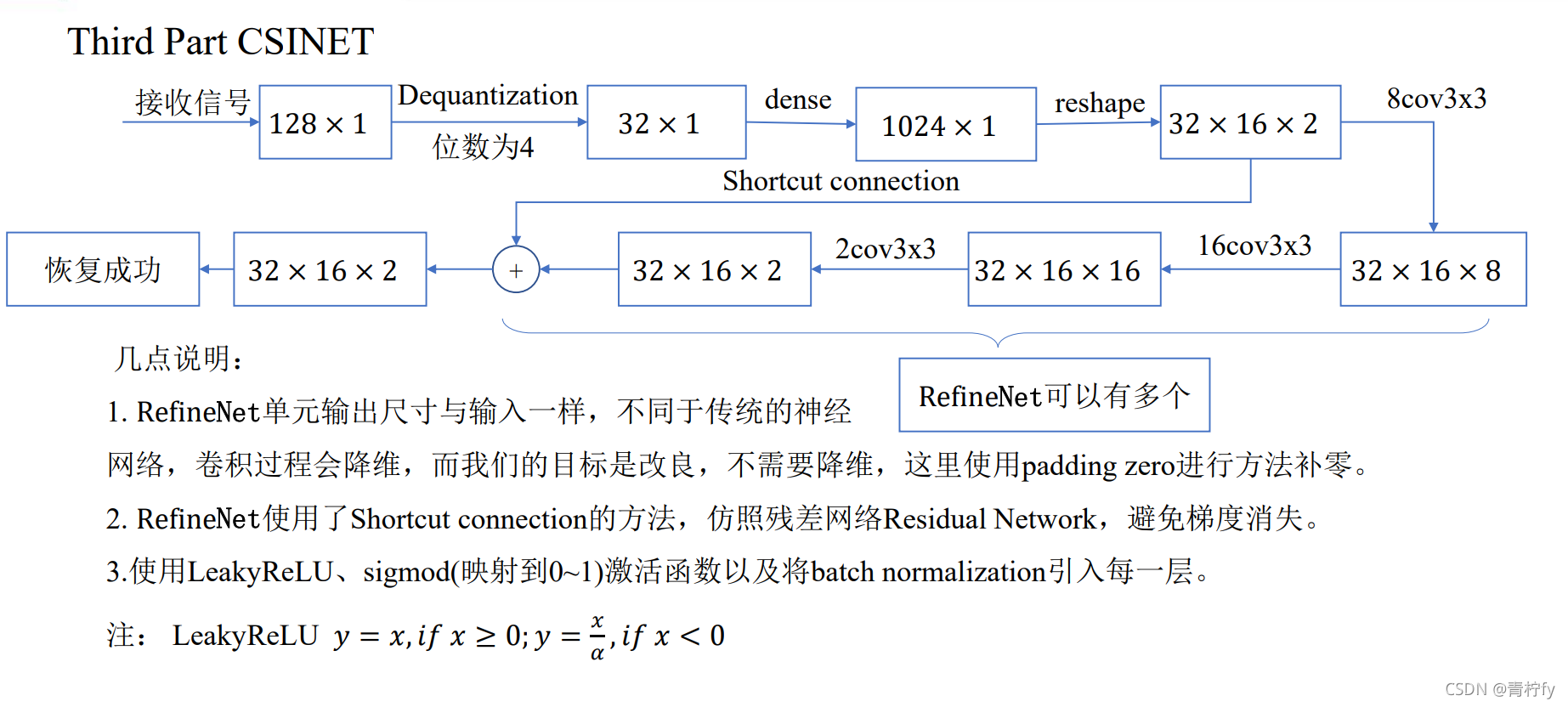

h = DeuantizationLayer(num_quan_bits)(dec_input) # 解量化,量化的位数为4

# h = tf.keras.layers.Reshape((-1, int(num_feedback_bits/num_quan_bits)))(h)

h = tf.keras.layers.Reshape((-1,int(num_feedback_bits / num_quan_bits)))(h)

# 一个数是4位,总传输的比特数 / 4 = 传输的十进制数的个数,-1的含义是自动计算列数,结果应该是1,这是一个列向量

h = layers.Dense(1024, activation='sigmoid')(h)

# 使用全连接层,将列向量维度拉伸为1024,并使用激活函数'sigmoid'

h = layers.Reshape((32, 16, 2))(h)

# 将1024x1的向量,reshape成32x16x2的矩阵,相当于有两个特征层,每一层是32x16

res_h = h

# 保留32x16x2的矩阵,之后进行shortcut connecttion操作,残差网络,防止梯度消失

#===========================================================================

h = layers.Conv2D(8, (3, 3), padding='SAME', data_format='channels_last')(h)

# 通道数(卷积核数)为3,3x3卷积核卷积,padding zeros 保持矩阵大小不变,data_format 数据格式最后一个数为通道数

# 生成三个特征层

h = keras.layers.LeakyReLU()(h)

h = layers.Conv2D(16, (3, 3), padding='SAME', data_format='channels_last')(h)

# 通道数(卷积核数)为3,3x3卷积核卷积,padding zeros 保持矩阵大小不变,data_format 数据格式最后一个数为通道数

# 生成三个特征层

h = keras.layers.LeakyReLU()(h)

# leakyReLU 激活

# ==================================================================================

# h = layers.Conv2D(3, (3, 3), padding='SAME', data_format='channels_last')(h)

# h = keras.layers.LeakyReLU()(h)

# for i in range(1):

# x = layers.Conv2D(3, kernel_size=(3, 3), padding='same', data_format='channels_last')(h)

# x = add_common_layers(x)

# 进行3个卷积核,卷积核尺寸为3x3的卷积操作,padding补0,保持矩阵大小不变,卷积完批量归一化,以及激活函数leakyRELU

# ===========================================================================

h = layers.Conv2D(2, kernel_size=(3, 3), padding='same', data_format='channels_last')(h)

# 2卷积核,卷积核尺寸为3x3的卷积操作,padding补0,保持矩阵大小不变,此时大小应该为32x16x2

dec_output = keras.layers.Add()([res_h, h])

# 与之前的h相加,残差网络,防止梯度消失

return dec_output
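
The NumPy shape trace below shows why the `-1` in `Reshape((-1, 32))` resolves to 1. It also shows why the last Conv2D needs exactly 2 filters before `Add()`. The random values, the hypothetical Dense kernel `W` and the zero stand-in for the convolutions are placeholders, not the trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, num_feedback_bits, num_quan_bits = 4, 128, 4
n_values = num_feedback_bits // num_quan_bits      # 32 dequantized numbers per sample

deq = rng.random((batch, n_values))                # stand-in for the DeuantizationLayer output
h = deq.reshape(batch, -1, n_values)               # like Reshape((-1, 32)): the -1 resolves to 1
print(h.shape)                                     # (4, 1, 32)

W = rng.standard_normal((n_values, 1024))          # hypothetical Dense(1024) kernel, bias omitted
h = 1 / (1 + np.exp(-(h @ W)))                     # Dense acts on the last axis, then sigmoid
print(h.shape)                                     # (4, 1, 1024)

h = h.reshape(batch, 32, 16, 2)                    # like Reshape((32, 16, 2))
res_h = h
conv_out = np.zeros_like(h)                        # stand-in for the final Conv2D with 2 filters
dec_output = res_h + conv_out                      # Add() needs both tensors to be 32 x 16 x 2
print(dec_output.shape)                            # (4, 32, 16, 2)
```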

```python
#=======================================================================================================================
# NMSE Function Defining
def NMSE(x, x_hat):
    # Normalized mean squared error; x is the true channel, x_hat the reconstruction
    x_real = np.reshape(x[:, :, :, 0], (len(x), -1))
    # real-part plane of x; len(x) is the size of the first axis (number of samples), not the element count
    x_imag = np.reshape(x[:, :, :, 1], (len(x), -1))
    # imaginary-part plane of x
    x_hat_real = np.reshape(x_hat[:, :, :, 0], (len(x_hat), -1))
    x_hat_imag = np.reshape(x_hat[:, :, :, 1], (len(x_hat), -1))
    x_C = x_real - 0.5 + 1j * (x_imag - 0.5)
    # subtracting 0.5 from both parts shifts values from (0, 1) to (-0.5, 0.5), centring them on 0
    x_hat_C = x_hat_real - 0.5 + 1j * (x_hat_imag - 0.5)
    power = np.sum(abs(x_C) ** 2, axis=1)
    # axis=1: sum along each row, i.e. over all entries of one sample
    mse = np.sum(abs(x_C - x_hat_C) ** 2, axis=1)
    nmse = np.mean(mse / power)
    return nmse

def get_custom_objects():
    # the custom quantization and dequantization layers, needed by Keras to load the saved .h5 models
    return {"QuantizationLayer": QuantizationLayer, "DeuantizationLayer": DeuantizationLayer}
```
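
Written as a formula, `NMSE` first rebuilds complex channel entries from the two (0, 1)-valued planes of each sample s, `x[:, :, :, 0]` and `x[:, :, :, 1]` with 512 entries each. It builds the reconstruction from `x_hat` in the same way, and then averages the per-sample normalised error over the S = `len(x)` samples:

$$
H_s[k] = \bigl(X^{\mathrm{re}}_s[k] - 0.5\bigr) + j\,\bigl(X^{\mathrm{im}}_s[k] - 0.5\bigr), \qquad
\mathrm{NMSE} = \frac{1}{S}\sum_{s=1}^{S} \frac{\sum_{k=1}^{512} \bigl|H_s[k] - \hat{H}_s[k]\bigr|^2}{\sum_{k=1}^{512} \bigl|H_s[k]\bigr|^2}
$$

The competition score printed by the evaluation script is 1 - NMSE. The CsiNet paper reports the same quantity in dB, as 10 log10(NMSE).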

# modeltrain 模块

#==================================================================================

import numpy as np

from tensorflow import keras

from modelDesign import Encoder, Decoder, NMSE#*

import scipy.io as sio

#==================================================================================

# Parameters Setting

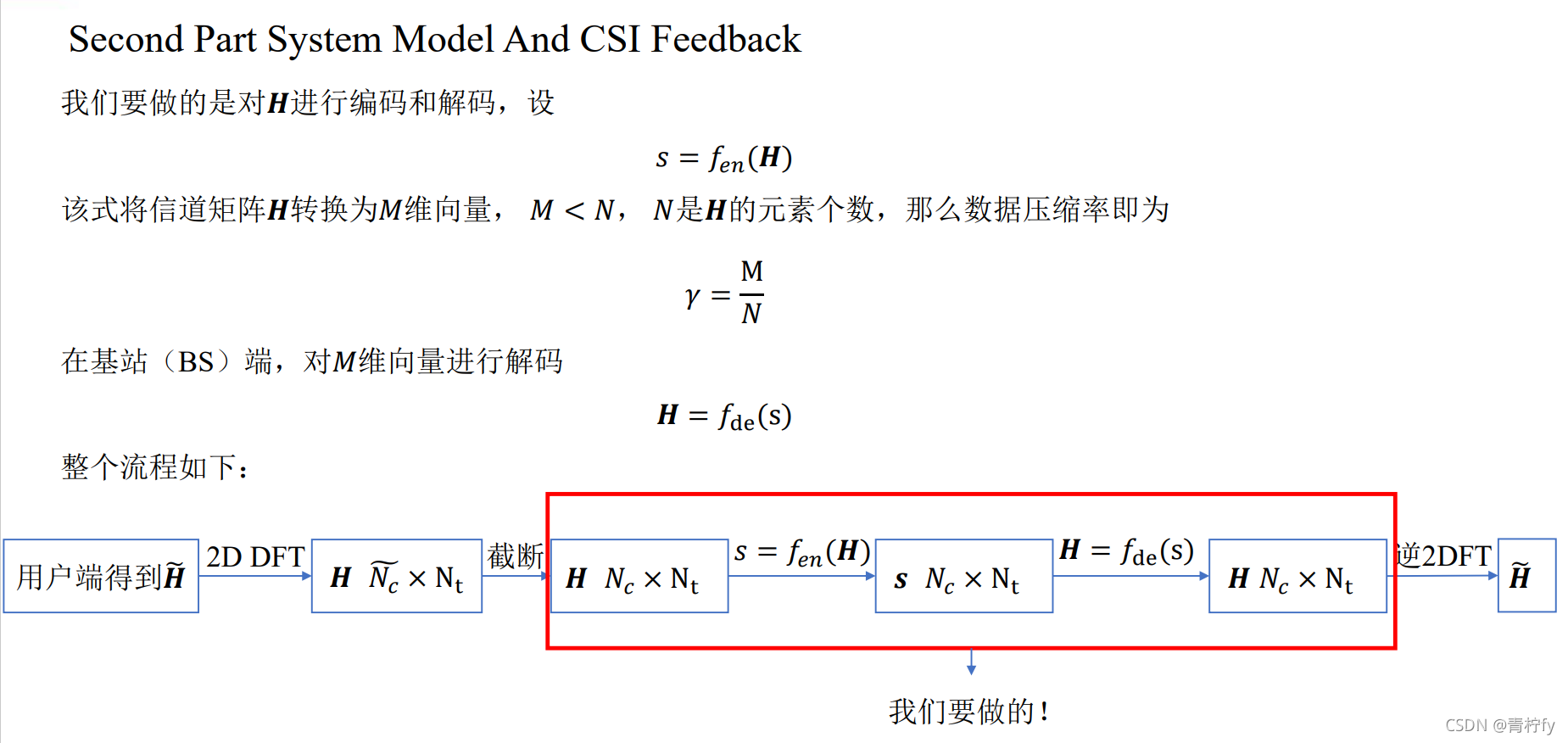

NUM_FEEDBACK_BITS = 128 # 反馈比特数为128 = M 压缩率为128/1024=0.125 即1/8

CHANNEL_SHAPE_DIM1 = 32 # 32x16x2=1024 N = 1024

CHANNEL_SHAPE_DIM2 = 16

CHANNEL_SHAPE_DIM3 = 2
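
Two ratios are easy to mix up here. The paper defines the compression ratio as gamma = M/N, where M is the length of the real-valued codeword. In this code, the encoder's last Dense layer outputs M = 128 / 4 = 32 values, and each value is then quantized to 4 bits. Dividing the 128 feedback bits by the N = 1024 real inputs gives 1/8, which is bits per real value rather than gamma. The arithmetic is sketched below with the constants above. `NUM_QUAN_BITS` is an assumed name that mirrors `num_quan_bits` inside `Encoder`.

```python
NUM_FEEDBACK_BITS = 128
NUM_QUAN_BITS = 4                         # mirrors num_quan_bits in Encoder/Decoder
N = 32 * 16 * 2                           # real values in one channel sample

M = NUM_FEEDBACK_BITS // NUM_QUAN_BITS    # length of the real-valued codeword before quantization
print(N, M)                               # 1024 32
print(M / N)                              # 0.03125, i.e. gamma = 1/32
print(NUM_FEEDBACK_BITS / N)              # 0.125 feedback bits per real input value
```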

```python
#=======================================================================================================================
import h5py
mat = h5py.File('./channelData/Hdata.mat', "r")
data = np.transpose(mat['H_train'])  # shape=(320000, 1024)
# Data Loading
# mat = sio.loadmat('./channelData/Hdata.mat')  # read the channel data (fails: this is a MATLAB v7.3 file)
# data = mat
# data = mat['Hdata.mat']
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
# print(np.size(data,1))
# reshape every sample to 32 x 16 x 2
```
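
The `np.transpose` is needed because MATLAB v7.3 .mat files are HDF5 files and MATLAB stores arrays in column-major order. As a result, h5py reports the dimensions reversed, and the data comes back as 1024 x 320000 until it is transposed. The small NumPy simulation below shows the effect without any HDF5 file. The 3 x 4 matrix is made up for illustration.

```python
import numpy as np

# A small "MATLAB" matrix: 3 samples (rows) x 4 values (columns)
A = np.arange(12).reshape(3, 4)

# MATLAB lays the data out column by column (Fortran order) ...
raw = A.ravel(order='F')

# ... and a row-major reader sees the dimensions in reverse order
as_read = raw.reshape(A.shape[::-1])

print(as_read.shape)                 # (4, 3)
print(np.array_equal(as_read.T, A))  # True: transposing restores samples x values
```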

```python
#=======================================================================================================================
# Model Constructing
# Encoder
encInput = keras.Input(shape=(CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
# encoder input
encOutput = Encoder(encInput, NUM_FEEDBACK_BITS)
# encode: the feedback is 128 bits
encModel = keras.Model(inputs=encInput, outputs=encOutput, name='Encoder')
encModel.summary()
# encoder model from its input and output
# Decoder
decInput = keras.Input(shape=(NUM_FEEDBACK_BITS,))
# decoder input: 128 bits
decOutput = Decoder(decInput, NUM_FEEDBACK_BITS)
# decode
decModel = keras.Model(inputs=decInput, outputs=decOutput, name="Decoder")
decModel.summary()
# decoder model from its input and output
# Autoencoder: the full model
autoencoderInput = keras.Input(shape=(CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
# autoencoder input
autoencoderOutput = decModel(encModel(autoencoderInput))
# encode first, then decode
autoencoderModel = keras.Model(inputs=autoencoderInput, outputs=autoencoderOutput, name='Autoencoder')
# autoencoder model from its input and output
```

# Comliling

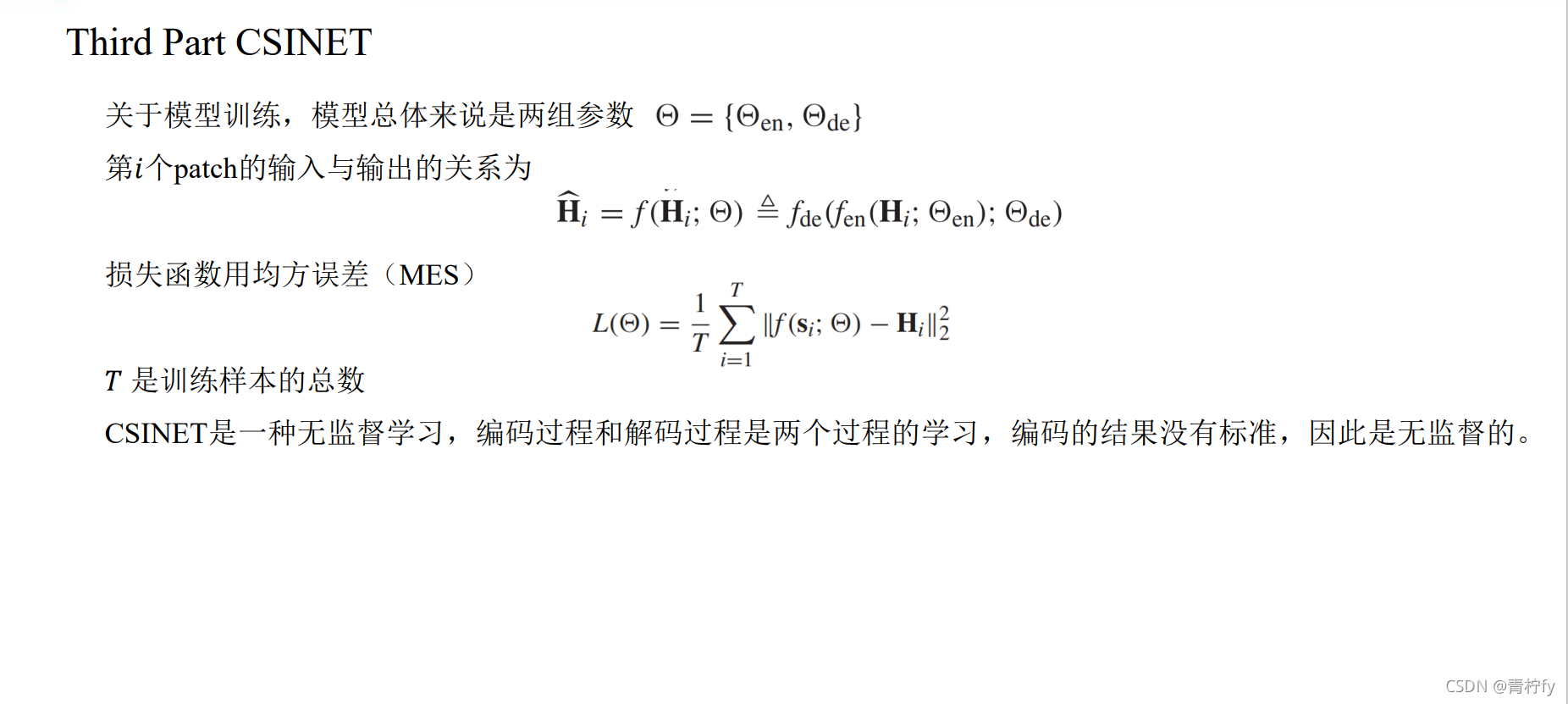

autoencoderModel.compile(optimizer='adam', loss='mse')

# 配置训练函数,优化器选用'adam',损失函数选用mse即均方误差

print(autoencoderModel.summary())

# 输出模型各层的参数状况

#==================================================================================

# Model Training 开始训练模型

autoencoderModel.fit(x=data, y=data, batch_size=16, epochs=2, verbose=1, validation_split=0.05)

# 模型拟合 批量数为64 代数为2 verbose = 1 为输出进度条记录 95%训练 5%检验

#==================================================================================

# Model Saving

# Encoder Saving

encModel.save('./modelSubmit/encoder.h5')

# Decoder Saving

decModel.save('./modelSubmit/decoder.h5')

#==================================================================================

# Model Testing

H_test = data

H_pre = autoencoderModel.predict(H_test, batch_size=512) # 之前为512

print('NMSE = ' + np.str(NMSE(H_test, H_pre)))

print('Training finished!')

#==================================================================================
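
With `validation_split=0.05`, Keras holds out the last 5% of the samples, taken before any shuffling, and trains on the rest. Note also that `H_test = data`, so the final NMSE is measured on the same data the model was trained on. The plain-Python check below works out the per-epoch numbers for the settings used above.

```python
num_samples = 320000
batch_size = 16

num_val = num_samples * 5 // 100               # validation_split=0.05, taken from the end of `data`
num_train = num_samples - num_val
steps_per_epoch = -(-num_train // batch_size)  # ceiling division: batches per epoch

print(num_train, num_val, steps_per_epoch)     # 304000 16000 19000
```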

# modelEvalEnc.py

```python
#==================================================================================
import numpy as np
import tensorflow as tf
from modelDesign import *
import scipy.io as sio
#==================================================================================
# Parameters Setting
NUM_FEEDBACK_BITS = 128
CHANNEL_SHAPE_DIM1 = 32
CHANNEL_SHAPE_DIM2 = 16
CHANNEL_SHAPE_DIM3 = 2
#==================================================================================
# Data Loading
import h5py
# Hdata.mat is a MATLAB v7.3 (HDF5) file, which scipy.io.loadmat cannot read.
# Dataset.value was removed in h5py 3.0; [()] reads the whole dataset.
# Transpose as in modeltrain so that the shape is (320000, 1024) before the reshape.
data = np.transpose(h5py.File('./channelData/Hdata.mat', 'r')['H_train'][()])
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
H_test = data  # the full data set serves as the test set
#==================================================================================
# Model Loading and Encoding
encoder_address = './modelSubmit/encoder.h5'
_custom_objects = get_custom_objects()
# dict mapping the custom layer names stored in the .h5 file to their classes
encModel = tf.keras.models.load_model(encoder_address, custom_objects=_custom_objects)
# load the encoder: everything from the input up to and including quantization
encode_feature = encModel.predict(H_test)
# run the encoder (inference, not training)
print("Feedback bits length is ", np.shape(encode_feature)[-1])
# should be 128
np.save('./encOutput.npy', encode_feature)
# save the feedback bits (the encoded and quantized data) in .npy format
print('Finished!')
#==================================================================================
```
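
Note 3 in the modelDesign docstring requires the encoder output to be a bitstream, but the script above only prints its length. The helper `check_feedback_bits` below is hypothetical and is not part of the competition kit. It is a small sketch that also checks, before saving, that every value is 0 or 1.

```python
import numpy as np

def check_feedback_bits(encode_feature, num_feedback_bits=128):
    """Hypothetical sanity check: one row of exactly num_feedback_bits 0/1 values per sample."""
    arr = np.asarray(encode_feature)
    if arr.ndim != 2 or arr.shape[1] != num_feedback_bits:
        return False
    return bool(np.isin(arr, (0, 1)).all())

bits = (np.arange(5 * 128).reshape(5, 128) % 2).astype(np.float32)
print(check_feedback_bits(bits))                     # True
print(check_feedback_bits(np.full((5, 128), 0.5)))   # False: not bits
```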

# modelEvalDec.py

```python
#==================================================================================
import numpy as np
import tensorflow as tf
from modelDesign import *
import scipy.io as sio
#==================================================================================
# Parameters Setting
NUM_FEEDBACK_BITS = 128
CHANNEL_SHAPE_DIM1 = 32
CHANNEL_SHAPE_DIM2 = 16
CHANNEL_SHAPE_DIM3 = 2
#==================================================================================
# Data Loading
import h5py
# [()] replaces Dataset.value (removed in h5py 3.0); transposed as in modeltrain
data = np.transpose(h5py.File('./channelData/Hdata.mat', 'r')['H_train'][()])
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
H_test = data
# encOutput Loading
encode_feature = np.load('./encOutput.npy')
# load the encoded data
#==================================================================================
# Model Loading and Decoding
decoder_address = './modelSubmit/decoder.h5'
_custom_objects = get_custom_objects()
# dict mapping the custom layer names stored in the .h5 file to their classes
model_decoder = tf.keras.models.load_model(decoder_address, custom_objects=_custom_objects)
# load the decoder: everything from dequantization to the reconstructed channel
H_pre = model_decoder.predict(encode_feature)
# decode with the model
# NMSE: normalized mean squared error
nmse = NMSE(H_test, H_pre)
if nmse < 0.1:
    print('Valid Submission')
    print('The Score is ' + str(1.0 - nmse))
print('Finished!')
#==================================================================================
```
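
The script above accepts a submission only when NMSE < 0.1 and reports 1 - NMSE as the score, whereas the CsiNet paper quotes NMSE in dB. Both conversions are sketched below. `score` and `nmse_db` are hypothetical helper names and are not part of the competition kit.

```python
import math

def score(nmse, threshold=0.1):
    # Mirrors modelEvalDec.py: valid only when NMSE < threshold, score = 1 - NMSE
    return 1.0 - nmse if nmse < threshold else None

def nmse_db(nmse):
    # NMSE on the dB scale used in the paper's results
    return 10 * math.log10(nmse)

print(score(0.05))   # about 0.95
print(score(0.2))    # None: not a valid submission
print(nmse_db(0.1))  # about -10 dB
```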

# Torch version

# modelDesign.py

```python
#=======================================================================================================================
import numpy as np
import torch.nn as nn
import torch
import torch.nn.functional as F
from torch.utils.data import Dataset

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
NUM_FEEDBACK_BITS = 128  # required in the PyTorch version: the eval scripts get it via `from modelDesign import *`
CHANNEL_SHAPE_DIM1 = 16  # Nc
CHANNEL_SHAPE_DIM2 = 32  # Nt, number of transmit antennas
CHANNEL_SHAPE_DIM3 = 2   # real and imaginary parts
BATCH_SIZE = 128  # originally 128
#=======================================================================================================================
```

```python
#=======================================================================================================================
# Number to Bit Defining Function Defining
def Num2Bit(Num, B):
    # Decimal numbers to B-bit binary numbers
    Num_ = Num.type(torch.uint8)

    def integer2bit(integer, num_bits=B * 2):
        dtype = integer.type()
        exponent_bits = -torch.arange(-(num_bits - 1), 1).type(dtype)
        # exponents 7 6 5 4 3 2 1 0
        exponent_bits = exponent_bits.repeat(integer.shape + (1,))
        out = integer.unsqueeze(-1) // 2 ** exponent_bits
        # unsqueeze adds a trailing axis to integer; floor-divide by each power of 2,
        # then take each quotient mod 2. Example for 5 (last 4 bits):
        # 5//8=0, 5//4=1, 5//2=2, 5//1=5; mod 2 -> 0 1 0 1, i.e. binary 0101, MSB first
        return (out - (out % 1)) % 2

    bit = integer2bit(Num_)
    bit = (bit[:, :, B:]).reshape(-1, Num_.shape[1] * B)  # keep the low B of the 2B bits: (batch, numbers x B)
    return bit.type(torch.float32)

def Bit2Num(Bit, B):
    # Each group of B bits back to a decimal number
    Bit_ = Bit.type(torch.float32)
    Bit_ = torch.reshape(Bit_, [-1, int(Bit_.shape[1] / B), B])
    num = torch.zeros(Bit_[:, :, 1].shape, device=Bit_.device)  # same device as the input instead of a hard-coded .cuda()
    for i in range(B):
        num = num + Bit_[:, :, i] * 2 ** (B - 1 - i)
    # 0101 to decimal: 0*8 + 1*4 + 0*2 + 1*1 = 5
    return num
#=======================================================================================================================
```
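
`integer2bit` converts integers to bits using only tensor arithmetic. Floor-dividing by 2^7, ..., 2^0 and taking each quotient mod 2 yields the binary digits MSB first. Because num_bits = 2B = 8, the slice `[:, :, B:]` then keeps the low B bits. Below is a NumPy re-implementation of the same idea, checked against the `np.unpackbits` route that the TensorFlow version uses. The name `integer_to_bits` is illustrative.

```python
import numpy as np

def integer_to_bits(values, num_bits):
    # Same idea as integer2bit: floor-divide by 2**(num_bits-1), ..., 2**0 and keep the parity
    exponents = np.arange(num_bits - 1, -1, -1)
    return (values[..., None] // 2 ** exponents) % 2

vals = np.array([[5, 15, 0, 9]], dtype=np.uint8)
bits8 = integer_to_bits(vals.astype(np.int64), 8)   # (1, 4, 8), MSB first
low4 = bits8[:, :, 4:]                              # like bit[:, :, B:] with num_bits = 2 * B = 8
print(low4.reshape(1, -1).tolist())
# [[0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1]]

# Same result as np.unpackbits followed by keeping the last 4 bits
ref = np.unpackbits(vals, axis=1).reshape(1, 4, 8)[:, :, 4:]
print(np.array_equal(low4, ref))                    # True
```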

```python
#=======================================================================================================================
# Quantization and Dequantization Layers Defining
class Quantization(torch.autograd.Function):
    # A subclass of torch.autograd.Function; forward and backward must be static methods.
    # The first argument is ctx, the second the input, the rest are optional arguments.
    # ctx plays a role similar to self: attributes set on it in forward (here B) can be read in backward.
    # Tensor arguments arrive in forward as plain tensors.
    @staticmethod
    def forward(ctx, x, B):
        ctx.constant = B
        step = 2 ** B
        out = torch.round(x * step - 0.5)  # scale (0, 1) to (0, 16), subtract 0.5 and round: roughly floor, giving 0..15
        out = Num2Bit(out, B)  # convert to bits
        return out

    @staticmethod
    def backward(ctx, grad_output):
        # return as many input gradients as there were arguments.
        # Gradients of constant arguments to forward must be None.
        # Gradient of a number is the mean of the gradients of its B bits.
        b, _ = grad_output.shape
        grad_num = torch.sum(grad_output.reshape(b, -1, ctx.constant), dim=2) / ctx.constant
        return grad_num, None

class Dequantization(torch.autograd.Function):
    # dequantization
    @staticmethod
    def forward(ctx, x, B):
        ctx.constant = B
        step = 2 ** B
        out = Bit2Num(x, B)  # bits to integers 0..15
        out = (out + 0.5) / step  # add 0.5 and divide by step: back to the centre of each interval in (0, 1)
        return out

    @staticmethod
    def backward(ctx, grad_output):
        # return as many input gradients as there were arguments.
        # Gradients of non-Tensor arguments to forward must be None.
        # repeat the gradient of a Num for B times (each copy divided by B).
        b, c = grad_output.shape
        grad_output = grad_output.unsqueeze(2) / ctx.constant
        grad_bit = grad_output.expand(b, c, ctx.constant)
        return torch.reshape(grad_bit, (-1, c * ctx.constant)), None

class QuantizationLayer(nn.Module):
    def __init__(self, B):
        super(QuantizationLayer, self).__init__()
        self.B = B

    def forward(self, x):
        out = Quantization.apply(x, self.B)
        return out

class DequantizationLayer(nn.Module):
    def __init__(self, B):
        super(DequantizationLayer, self).__init__()
        self.B = B

    def forward(self, x):
        out = Dequantization.apply(x, self.B)
        return out
#=======================================================================================================================
```
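
The two `backward` methods implement a straight-through-style gradient. They skip the non-differentiable rounding and bit packing and only reshape the incoming gradient. Composing them as written shows the net effect. `Dequantization.backward` gives each bit 1/B of its number's gradient, and `Quantization.backward` then averages the B bit-gradients again. The gradient that reaches the encoder's sigmoid output is therefore g/B. The NumPy trace below uses a made-up upstream gradient `g`.

```python
import numpy as np

B = 4
g = np.array([[1.0, -2.0, 0.5]])   # hypothetical upstream gradient w.r.t. 3 dequantized numbers

# Dequantization.backward: copy each number's gradient to its B bits, divided by B
b, c = g.shape
grad_bits = np.repeat(g[:, :, None] / B, B, axis=2).reshape(b, c * B)

# Quantization.backward: sum the B bit-gradients of each number and divide by B
grad_nums = grad_bits.reshape(b, -1, B).sum(axis=2) / B

print(grad_nums.tolist())          # [[0.25, -0.5, 0.125]], i.e. g / B
```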

```python
#=======================================================================================================================
# Encoder and Decoder Class Defining
def conv3x3(in_channels, out_channels, stride=1):
    # 3x3 convolution: in_channels input channels, out_channels filters, stride 1 by default
    # padding=1 keeps the spatial size (for stride 1); bias=True adds a bias term
    return nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=True)
```
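
The claim that `padding=1` keeps the size follows from the output-size formula in the PyTorch `nn.Conv2d` documentation. The sketch below applies the formula to the 16 x 32 channel and confirms that 2 channels of that size flatten to the 1024 inputs of the encoder's `nn.Linear`. `conv_out_size` is an illustrative name.

```python
def conv_out_size(n, kernel_size=3, stride=1, padding=1, dilation=1):
    # Output length along one spatial axis, per the nn.Conv2d shape formula
    return (n + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

h, w = 16, 32
print(conv_out_size(h), conv_out_size(w))        # 16 32: a 3x3 kernel with padding=1 keeps the size
print(2 * conv_out_size(h) * conv_out_size(w))   # 1024, the in_features of the encoder's nn.Linear
```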

```python
class Encoder(nn.Module):
    num_quan_bits = 4  # 4 quantization bits per value

    def __init__(self, feedback_bits):  # feedback_bits = 128
        super(Encoder, self).__init__()
        self.conv1 = conv3x3(2, 2)  # convolution with 2 input channels and 2 output channels
        self.conv2 = conv3x3(2, 2)  # convolution with 2 input channels and 2 output channels
        self.fc = nn.Linear(1024, int(feedback_bits / self.num_quan_bits))
        # fully connected layer: 1024 -> 128 / 4 = 32
        self.sig = nn.Sigmoid()
        # activation function
        self.quantize = QuantizationLayer(self.num_quan_bits)
        # quantization

    def forward(self, x):
        x = x.permute(0, 3, 1, 2)
        # reorder the axes to put the channels first: (batch, 16, 32, 2) -> (batch, 2, 16, 32)
        ########################################
        # first convolution
        x = self.conv1(x)
        # x = nn.BatchNorm2d(x).to(device=device)
        x = F.leaky_relu(x, negative_slope=0.3)
        #########################################
        # second convolution
        x = self.conv2(x)
        # x = nn.BatchNorm2d(x).to(device=device)
        out = F.leaky_relu(x, negative_slope=0.3)
        #########################################
        # out = F.relu()  # conv + relu
        # out = F.relu(self.conv2(out))  # conv + relu
        out = out.contiguous().view(-1, 1024)
        # flatten each sample to 1024 values; view only changes how the data is indexed, not the data itself.
        # After permute the memory is not contiguous, so contiguous().view() is needed (or use reshape)
        out = self.fc(out)  # fully connected: 1024 -> 32
        out = self.sig(out)  # sigmoid activation
        out = self.quantize(out)  # quantize
        return out
```
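
`permute` only reorders the strides and returns a non-contiguous view. This is why the encoder calls `.contiguous()` before `.view()`, whereas `reshape` would copy the data when needed. NumPy has the same concept, so the sketch below shows the effect without PyTorch or a GPU, using `np.transpose` as the analogue of `permute`.

```python
import numpy as np

x = np.zeros((4, 16, 32, 2), dtype=np.float32)   # (batch, Nc, Nt, real/imag), as loaded
y = x.transpose(0, 3, 1, 2)                      # like x.permute(0, 3, 1, 2): a view with new strides
print(y.shape, y.flags['C_CONTIGUOUS'])          # (4, 2, 16, 32) False

z = np.ascontiguousarray(y).reshape(-1, 1024)    # like .contiguous().view(-1, 1024)
print(z.shape, z.flags['C_CONTIGUOUS'])          # (4, 1024) True
```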

```python
class Decoder(nn.Module):
    num_quan_bits = 4  # 4 quantization bits per value

    def __init__(self, feedback_bits):
        super(Decoder, self).__init__()
        self.feedback_bits = feedback_bits  # 128 feedback bits
        self.dequantize = DequantizationLayer(self.num_quan_bits)  # dequantization
        self.multiConvs = nn.ModuleList()  # list of convolution blocks
        self.fc = nn.Linear(int(feedback_bits / self.num_quan_bits), 1024)
        # fully connected layer: 32 -> 1024
        self.out_cov = conv3x3(2, 2)  # final convolution, 2 input channels, 2 output channels
        self.sig = nn.Sigmoid()  # sigmoid
        for _ in range(3):
            self.multiConvs.append(nn.Sequential(
                conv3x3(2, 8),
                # nn.BatchNorm2d(num_features=BATCH_SIZE*8*CHANNEL_SHAPE_DIM1*CHANNEL_SHAPE_DIM2, affine=True),
                nn.LeakyReLU(negative_slope=0.3),
                conv3x3(8, 16),
                # nn.BatchNorm2d(num_features=BATCH_SIZE*8*CHANNEL_SHAPE_DIM1*CHANNEL_SHAPE_DIM2, affine=True),
                nn.LeakyReLU(negative_slope=0.3),
                conv3x3(16, 2)))
                # nn.BatchNorm2d(num_features=BATCH_SIZE*8*CHANNEL_SHAPE_DIM1*CHANNEL_SHAPE_DIM2, affine=True)))
        # three blocks, each with channels 2 -> 8 -> 16 -> 2

    def forward(self, x):
        out = self.dequantize(x)  # dequantize first
        out = out.contiguous().view(-1, int(self.feedback_bits / self.num_quan_bits))
        # shape (batch, 32)
        # contiguous().view() is needed here, or use reshape
        out = self.sig(self.fc(out))
        # 32 -> 1024
        out = out.contiguous().view(-1, 2, 16, 32)
        # shape (batch, 2, 16, 32): channels first
        # contiguous().view() is needed here, or use reshape
        #############################################
        # first refine net
        residual = out
        for i in range(3):
            out = self.multiConvs[i](out)
        out = residual + out
        out = F.leaky_relu(out, negative_slope=0.3)
        # second refine net: it reuses the same three blocks, so it shares their weights with the first
        residual = out
        for i in range(3):
            out = self.multiConvs[i](out)
        out = residual + out
        ################################################
        out = self.out_cov(out)
        # out = F.batch_norm(out)
        # one more convolution, 2 channels in and 2 out
        out = self.sig(out)
        # sigmoid maps the output to (0, 1), the range of the input data
        out = out.permute(0, 2, 3, 1)
        # move the channel axis back to the end: (batch, 16, 32, 2)
        return out
```
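
Both "refine net" stages in `forward()` index the same `self.multiConvs` list, so the second stage reuses the first stage's weights instead of adding its own. The parameter count for `self.multiConvs` is worked out below. With PyTorch installed, it should match `sum(p.numel() for p in decoder.multiConvs.parameters())`. `conv3x3_params` is an illustrative helper.

```python
def conv3x3_params(in_channels, out_channels):
    # 3x3 kernel weights plus one bias per output channel (bias=True in conv3x3)
    return in_channels * out_channels * 3 * 3 + out_channels

block = conv3x3_params(2, 8) + conv3x3_params(8, 16) + conv3x3_params(16, 2)
print(block)       # 1610 parameters in one 2 -> 8 -> 16 -> 2 block
print(3 * block)   # 4830 in self.multiConvs, shared by both refine stages
```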

```python
class AutoEncoder(nn.Module):
    def __init__(self, feedback_bits):
        super(AutoEncoder, self).__init__()
        self.encoder = Encoder(feedback_bits)  # encoder
        self.decoder = Decoder(feedback_bits)  # decoder

    def forward(self, x):
        feature = self.encoder(x)  # encode
        out = self.decoder(feature)  # decode
        return out
#=======================================================================================================================
# NMSE Function Defining
def NMSE(x, x_hat):
    # Normalized mean squared error; x is the true channel, x_hat the reconstruction
    x_real = np.reshape(x[:, :, :, 0], (len(x), -1))
    # real-part plane of x; len(x) is the size of the first axis (number of samples), not the element count
    x_imag = np.reshape(x[:, :, :, 1], (len(x), -1))
    # imaginary-part plane of x
    x_hat_real = np.reshape(x_hat[:, :, :, 0], (len(x_hat), -1))
    x_hat_imag = np.reshape(x_hat[:, :, :, 1], (len(x_hat), -1))
    x_C = x_real - 0.5 + 1j * (x_imag - 0.5)
    # subtracting 0.5 from both parts shifts values from (0, 1) to (-0.5, 0.5), centring them on 0
    x_hat_C = x_hat_real - 0.5 + 1j * (x_hat_imag - 0.5)
    power = np.sum(abs(x_C) ** 2, axis=1)
    # axis=1 sums over the second axis, i.e. over all entries of each sample, leaving one value per sample
    mse = np.sum(abs(x_C - x_hat_C) ** 2, axis=1)
    nmse = np.mean(mse / power)
    return nmse

def Score(NMSE):
    score = 1 - NMSE
    return score
#=======================================================================================================================
# Data Loader Class Defining
class DatasetFolder(Dataset):
    def __init__(self, matData):
        self.matdata = matData  # array of samples

    def __getitem__(self, index):
        return self.matdata[index]  # return one sample by index

    def __len__(self):
        return self.matdata.shape[0]  # number of samples
```

# modelTrain.py

```python
#=======================================================================================================================
import numpy as np
import torch
from modelDesign import AutoEncoder, DatasetFolder  # or: from modelDesign import *
import os
import torch.nn as nn
import scipy.io as sio  # cannot read the MATLAB v7.3 .mat file used here

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
#=======================================================================================================================
# Parameters Setting for Data
NUM_FEEDBACK_BITS = 128  # number of feedback bits
CHANNEL_SHAPE_DIM1 = 16  # Nc
CHANNEL_SHAPE_DIM2 = 32  # Nt, number of transmit antennas
CHANNEL_SHAPE_DIM3 = 2   # real and imaginary parts
# Parameters Setting for Training
BATCH_SIZE = 128  # originally 128
EPOCHS = 1000
LEARNING_RATE = 1e-3
PRINT_RREQ = 100  # print the loss every 100 batches
torch.manual_seed(1)  # fix the random seed so the network initialization is reproducible
```

```python
#=======================================================================================================================
# Data Loading
# mat = sio.loadmat('channelData/Hdata.mat')
# # data = mat['H_4T4R']
import h5py
mat = h5py.File('./channelData/Hdata.mat', "r")  # read the data
data = np.transpose(mat['H_train'])  # shape=(320000, 1024)
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
# data: 320000 x 16 x 32 x 2
# data = np.transpose(data, (0, 3, 1, 2))
split = int(data.shape[0] * 0.7)
# 70% of the data for training, 30% for testing
data_train, data_test = data[:split], data[split:]
train_dataset = DatasetFolder(data_train)
# wrap data_train in DatasetFolder so the DataLoader can index it
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=0, pin_memory=True)
# 320000 * 0.7 = 224000 training samples; 224000 / 128 = 1750 batches per epoch
# batch_size: samples per batch; shuffle=True reshuffles the data every epoch;
# num_workers: subprocesses used for loading (0 means the main process, the default)
# pin_memory=True: the loader copies tensors into CUDA pinned memory before returning them
test_dataset = DatasetFolder(data_test)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=BATCH_SIZE, shuffle=False, num_workers=0, pin_memory=True)
```
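
The split and batch counts for these settings are checked below in plain Python. A DataLoader with the default `drop_last=False` yields ceil(samples / batch_size) batches, so `len(train_loader)` in the training printout should equal `train_batches`.

```python
import math

num_samples = 320000
BATCH_SIZE = 128

split = int(num_samples * 0.7)                       # same expression as in modelTrain.py
num_train, num_test = split, num_samples - split
train_batches = math.ceil(num_train / BATCH_SIZE)    # len(train_loader) with drop_last=False
test_batches = math.ceil(num_test / BATCH_SIZE)

print(num_train, num_test)           # 224000 96000
print(train_batches, test_batches)   # 1750 750
```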

```python
#=======================================================================================================================
# Model Constructing
autoencoderModel = AutoEncoder(NUM_FEEDBACK_BITS).to(device)  # build the autoencoder, on the GPU if one is available
criterion = nn.MSELoss().to(device)  # MSE loss
optimizer = torch.optim.Adam(autoencoderModel.parameters(), lr=LEARNING_RATE)
# Adam optimizer over the network parameters
```

```python
#=======================================================================================================================
# Model Training and Saving
bestLoss = 1  # lowest test loss so far; a model is saved only when it beats this value
for epoch in range(EPOCHS):
    autoencoderModel.train()
    # training mode: enables Batch Normalization updates and Dropout (which randomly drops connections
    # during training); this model uses neither, so it has no effect here
    for i, autoencoderInput in enumerate(train_loader):
        # iterate over the training data
        autoencoderInput = autoencoderInput.to(device)  # move the batch to the GPU if available
        autoencoderOutput = autoencoderModel(autoencoderInput)  # forward pass
        loss = criterion(autoencoderOutput, autoencoderInput)  # MSE between output and input
        optimizer.zero_grad()  # clear old gradients
        loss.backward()  # backpropagate to compute the current gradients
        optimizer.step()  # update the parameters from the gradients
        if i % PRINT_RREQ == 0:
            print('Epoch: [{0}][{1}/{2}]\t' 'Loss {loss:.4f}\t'.format(epoch, i, len(train_loader), loss=loss.item()))
    # Model Evaluating
    autoencoderModel.eval()
    # evaluation mode: disables Dropout, and BN layers use their running mean and variance
    # from training instead of batch statistics, so they stay fixed during testing
    totalLoss = 0
    # operations inside torch.no_grad() are not tracked and are not recorded for backpropagation
    with torch.no_grad():
        for i, autoencoderInput in enumerate(test_loader):
            # load test data
            autoencoderInput = autoencoderInput.to(device)
            autoencoderOutput = autoencoderModel(autoencoderInput)
            totalLoss += criterion(autoencoderOutput, autoencoderInput).item() * autoencoderInput.size(0)
            # size(0) is the batch size; accumulate the model's loss on the test data
        averageLoss = totalLoss / len(test_dataset)  # average test loss after each epoch
        if averageLoss < bestLoss:  # save only if the loss is lower than the best so far
            # Model saving
            # Encoder Saving
            torch.save({'state_dict': autoencoderModel.encoder.state_dict(), }, './modelSubmit/encoder.pth.tar')
            # Decoder Saving
            torch.save({'state_dict': autoencoderModel.decoder.state_dict(), }, './modelSubmit/decoder.pth.tar')
            print("Model saved")
            bestLoss = averageLoss  # update the best loss; later models must beat it to be saved
#=======================================================================================================================
```
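
`criterion` is `nn.MSELoss` with its default `reduction='mean'`, so it returns a batch mean. The evaluation loop therefore multiplies each value by `autoencoderInput.size(0)` and divides the total by `len(test_dataset)`. This gives the true per-sample average even when the last batch is smaller; here 96000 happens to divide evenly by 128. The plain-Python illustration below uses made-up loss values.

```python
# Hypothetical per-batch mean losses; the last batch is shorter
batch_means = [0.020, 0.018, 0.030]
batch_sizes = [128, 128, 44]

# What the evaluation loop does: weight each batch mean by its size, divide by the dataset size
total = sum(m * n for m, n in zip(batch_means, batch_sizes))
average_loss = total / sum(batch_sizes)

# A plain mean of the batch means would over-weight the short last batch
naive = sum(batch_means) / len(batch_means)
print(round(average_loss, 5), round(naive, 5))
```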

# modelEvalEnc.py

```python
#=======================================================================================================================
import numpy as np
from modelDesign import *
import torch
import scipy.io as sio
#=======================================================================================================================
# Parameters Setting
NUM_FEEDBACK_BITS = NUM_FEEDBACK_BITS  # 128, imported from modelDesign through the * import
CHANNEL_SHAPE_DIM1 = 16
CHANNEL_SHAPE_DIM2 = 32
CHANNEL_SHAPE_DIM3 = 2
#=======================================================================================================================
# Data Loading
import h5py
mat = h5py.File('./channelData/Hdata.mat', "r")
data = np.transpose(mat['H_train'])  # shape=(320000, 1024)
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
# reshape to 320000 x 16 x 32 x 2
H_test = data
test_dataset = DatasetFolder(H_test)
# wrap in DatasetFolder
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=512, shuffle=False, num_workers=0, pin_memory=True)
# encode 512 samples per batch, without shuffling; num_workers=0 loads in the main process (the default)
# pin_memory=True: the loader copies tensors into CUDA pinned memory before returning them
#=======================================================================================================================
# Model Loading
autoencoderModel = AutoEncoder(NUM_FEEDBACK_BITS).to(device)  # device comes from modelDesign
model_encoder = autoencoderModel.encoder  # take the encoder part
model_encoder.load_state_dict(torch.load('./modelSubmit/encoder.pth.tar', map_location=device)['state_dict'])
# torch.load reads the saved checkpoint, and load_state_dict (an nn.Module method)
# copies the trained weights into the network
print("weight loaded")
#=======================================================================================================================
# Encoding
model_encoder.eval()
encode_feature = []
with torch.no_grad():
    for i, autoencoderInput in enumerate(test_loader):
        # 512 samples per batch, 625 batches, 320000 samples in total
        autoencoderInput = autoencoderInput.to(device)
        autoencoderOutput = model_encoder(autoencoderInput)
        autoencoderOutput = autoencoderOutput.cpu().numpy()
        if i == 0:
            encode_feature = autoencoderOutput
        else:
            encode_feature = np.concatenate((encode_feature, autoencoderOutput), axis=0)
            # concatenate: append each batch's result along axis 0, the sample axis
print("feedbackbits length is ", np.shape(encode_feature)[-1])
np.save('./encOutput.npy', encode_feature)
print('Finished!')
#=======================================================================================================================
```
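
Concatenating inside the loop copies the whole accumulated array again for every batch, so the total copying grows quadratically with the number of batches (625 here). A common alternative is to append each batch to a Python list and call `np.concatenate` once at the end. The sketch below uses stand-in arrays to show that both approaches give the same result.

```python
import numpy as np

batches = [np.full((512, 128), i, dtype=np.float32) for i in range(3)]  # stand-ins for encoder outputs

# Pattern used above: grow the array on every iteration
grown = None
for i, out in enumerate(batches):
    grown = out if i == 0 else np.concatenate((grown, out), axis=0)

# Alternative: collect the batches and concatenate once at the end
collected = np.concatenate(batches, axis=0)

print(collected.shape)                   # (1536, 128)
print(np.array_equal(grown, collected))  # True
```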

# modelEvalDec.py

```python
#=======================================================================================================================
import numpy as np
from modelDesign import *
import torch
import scipy.io as sio
#=======================================================================================================================
# Parameters Setting
NUM_FEEDBACK_BITS = NUM_FEEDBACK_BITS  # 128, imported from modelDesign through the * import
CHANNEL_SHAPE_DIM1 = 16
CHANNEL_SHAPE_DIM2 = 32
CHANNEL_SHAPE_DIM3 = 2
#=======================================================================================================================
# Data Loading
import h5py
mat = h5py.File('./channelData/Hdata.mat', "r")
data = np.transpose(mat['H_train'])  # shape=(320000, 1024)
data = data.astype('float32')
data = np.reshape(data, (len(data), CHANNEL_SHAPE_DIM1, CHANNEL_SHAPE_DIM2, CHANNEL_SHAPE_DIM3))
H_test = data
# encOutput Loading
encode_feature = np.load('./encOutput.npy')
test_dataset = DatasetFolder(encode_feature)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=512, shuffle=False, num_workers=0, pin_memory=True)
#=======================================================================================================================
# Model Loading and Decoding
autoencoderModel = AutoEncoder(NUM_FEEDBACK_BITS).to(device)  # device comes from modelDesign
model_decoder = autoencoderModel.decoder
model_decoder.load_state_dict(torch.load('./modelSubmit/decoder.pth.tar', map_location=device)['state_dict'])
print("weight loaded")
model_decoder.eval()
H_pre = []
with torch.no_grad():
    for i, decoderOutput in enumerate(test_loader):
        # convert numpy to Tensor
        # decode 512 samples per batch, 625 batches in total
        # (despite its name, decoderOutput is the decoder's input: a batch of feedback bits)
        decoderOutput = decoderOutput.to(device)
        output = model_decoder(decoderOutput)
        output = output.cpu().numpy()
        if i == 0:
            H_pre = output
        else:
            H_pre = np.concatenate((H_pre, output), axis=0)
            # axis=0: append along the sample axis
# if (NMSE(H_test, H_pre) < 0.1):
#     # compute the NMSE
#     print('Valid Submission')
#     print('The Score is ' + str(1.0 - NMSE(H_test, H_pre)))
#     print('Finished!')
print(str(1.0 - NMSE(H_test, H_pre)))  # np.str was removed in NumPy 1.24; use str
#=======================================================================================================================
```