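N1CTF 2021 Collision: Exploit Analysis Write-up

Collision

I did not solve this challenge during N1CTF 2021; the final exploit in this post is adapted from the one released by the challenge author. Here I share my experience working on the challenge and an analysis of the exploit.

Original exploit: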

https://github.com/Nu1LCTF/n1ctf-2021/blob/main/Misc/collision/exp.py

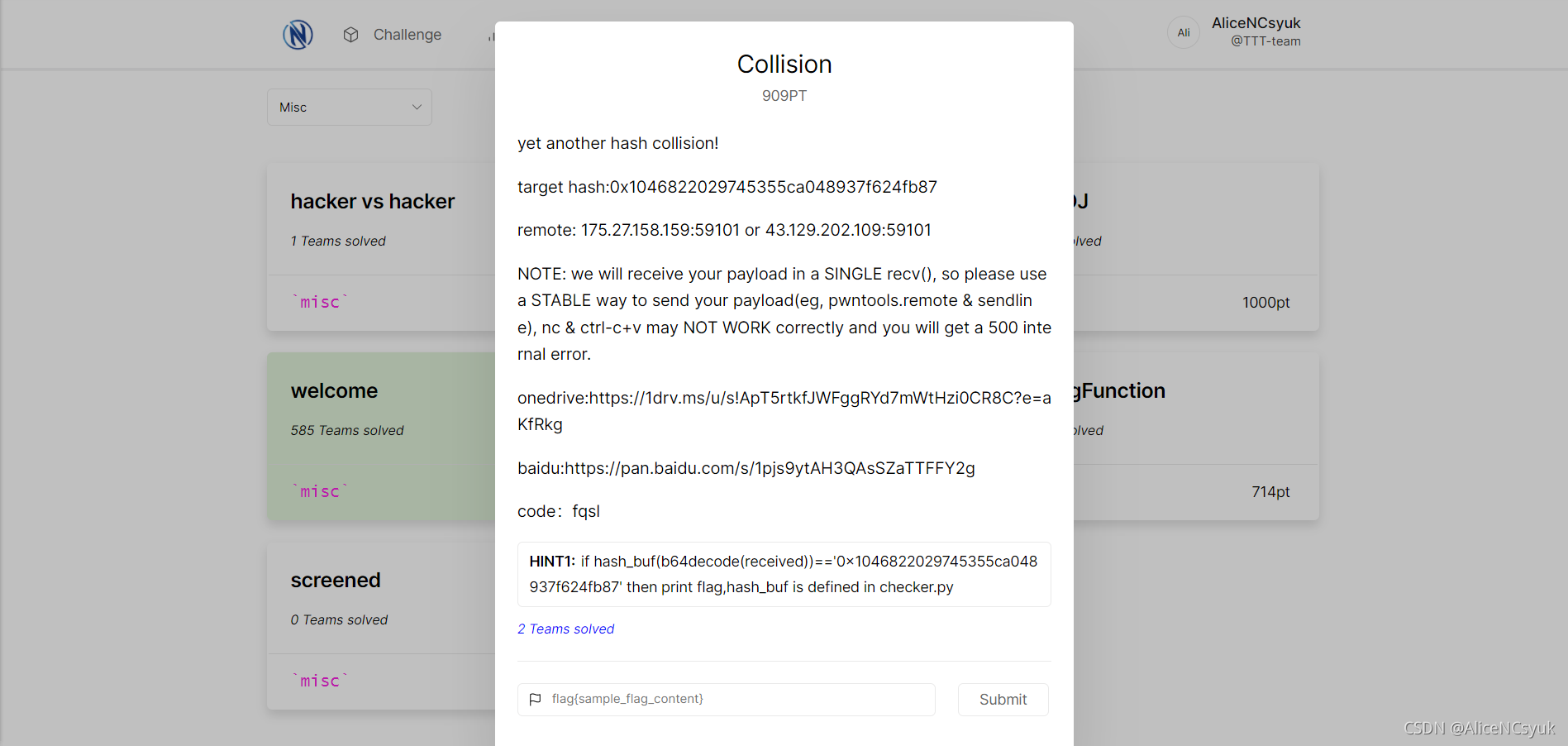

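The challenge description is as follows:

The attachment can be downloaded here:

Link: https://pan.baidu.com/s/1tYfzP7YOiB8UEGwDYdMNbg

Extraction code: 7zgc

The attachment contains a script and the parameters of a model. The script is as follows: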

import base64
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        return x

def load_model(path):
    model = Net()
    model.load_state_dict(torch.load(path, map_location="cpu"))
    return model.eval()

model = load_model("convNet.pt")

def hex_hash(hash_bits):
    x = hash_bits.detach().numpy()
    res = [str(int(i > 0)) for i in x[0]]
    return hex(int(''.join(res), 2))

L0_THRES = 54.1
L2_THRES = 6.45

src = np.frombuffer(base64.b64decode(

'AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPgAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOPiYj8AAIA/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADl5OQ+AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA6ejoPQAAgD/j4mI/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/jo0NPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD6trKw+raysPo6NDT/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD7j4mI/AACAPwAAgD8AAIA/AACAP+XkZD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAPwAAgD/l5OQ+5eRkPuPiYj/HxkY/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAADl5GQ+AACAPwAAgD+trKw+AAAAAAAAAACrqio/AACAPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAA
AAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NPwAAgD/l5OQ+AAAAAAAAAAAAAAAA6ejoPQAAgD/HxkY/AAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/AACAPwAAAAAAAAAAAAAAAKuqKj8AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAADHxkY/4+JiPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/l5OQ+AAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAACtrKw+AACAP+Xk5D4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAK2srD4AAIA/AACAP6uqKj/l5GQ+AACAPwAAgD/p6Og9AAAAAAAAAACtrKw+4+JiP+PiYj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPuPiYj8AAIA/AACAPwAAgD8AAIA/raysPq2srD6rqio/AACAPwAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADp6Og9jo0NPwAAgD8AAIA/AACAPwAAgD8AAIA/AACAP8fGRj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA4+JiPwAAgD8AAIA/jo0NP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/AACAP6uqKj+rqio/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NP+PiYj+rqio/5eTkPuno6D0AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA=='),

dtype="float32")

def hash_buf(buf):
    global src
    arr = np.frombuffer(buf, dtype="float32")
    if arr.shape[0] != 28 * 28:
        raise BufferError("hacker")
    arr = np.clip(arr, 0, 1)
    diff = arr - src
    #print(np.linalg.norm(diff, 0), np.linalg.norm(diff, 2))
    if np.linalg.norm(diff, 0) > L0_THRES or np.linalg.norm(diff, 2) > L2_THRES:
        raise BufferError("attack failed")
    arr = torch.FloatTensor(arr).reshape(1, 1, 28, 28)
    # need=0x1046822029745355ca048937f624fb87
    # hash(src)=0x91460a702d62d166a9942cb7fc10d7a2
    return hex_hash(model(arr))

Let's analyze the code. It first defines a CNN model, Net. Note that forward returns the output of fc1, so the model produces a 128-dimensional feature vector; fc2 and dropout2 are defined but never used in the forward pass.

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        return x
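The input size of fc1 (9216) follows from the layer shapes. The short check below walks a 28x28 input through the two unpadded 3x3 convolutions and the 2x2 max pooling; `conv_out` is an illustrative helper, not part of the challenge code.

```python
def conv_out(size, kernel, stride=1):
    """Output width of an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


side = 28                            # MNIST input is 1 x 28 x 28
side = conv_out(side, 3)             # conv1: 3x3 kernel, stride 1 -> 26
side = conv_out(side, 3)             # conv2: 3x3 kernel, stride 1 -> 24
side = conv_out(side, 2, stride=2)   # max_pool2d(x, 2) -> 12
flat = 64 * side * side              # conv2 outputs 64 channels
print(side, flat)                    # 12 9216
```

So `torch.flatten(x, 1)` yields 64 * 12 * 12 = 9216 values per image, which fc1 maps to the 128 features that are later hashed.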

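Next, load_model loads the provided convNet.pt parameters. Because it returns model.eval(), dropout is disabled at inference time and the output is deterministic.

def load_model(path):
    model = Net()
    model.load_state_dict(torch.load(path, map_location="cpu"))
    return model.eval()

model = load_model("convNet.pt")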

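hex_hash produces the hash value. It maps each element of the given array to one bit, 0 if the element is less than or equal to 0 and 1 otherwise, then reads the resulting bit string as a binary number and returns it in hexadecimal. Since fc1 has 128 outputs, the hash is 128 bits long.

def hex_hash(hash_bits):
    x = hash_bits.detach().numpy()
    res = [str(int(i > 0)) for i in x[0]]
    return hex(int(''.join(res), 2))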
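To make the bit mapping concrete, here is a minimal sketch of the same sign-to-bit rule in plain Python, with no torch or numpy so that it runs on its own. The helper names `sign_bits_to_hex` and `hamming_distance` are illustrative and not part of the challenge code; the two hex constants are the `need` and `hash(src)` values quoted in the challenge's comments.

```python
def sign_bits_to_hex(features):
    """Map each feature to a bit (1 if > 0, else 0) and return the bits as hex."""
    bits = ''.join('1' if value > 0 else '0' for value in features)
    return hex(int(bits, 2))


def hamming_distance(hex_a, hex_b):
    """Count the bit positions in which two hex-encoded hashes differ."""
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count('1')


# Four features -> bits 1, 0, 0, 1 (zero counts as "not positive").
print(sign_bits_to_hex([0.5, -1.0, 0.0, 2.0]))  # 0x9

need = '0x1046822029745355ca048937f624fb87'
src_hash = '0x91460a702d62d166a9942cb7fc10d7a2'
# Number of feature signs the attack has to flip when starting from src.
print(hamming_distance(need, src_hash))
```

Note that `hex()` drops leading zero bits, so two hashes should be compared as strings only when both were produced the same way, which is what the challenge does.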

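Next come the L0 and L2 norm constraints that are common in adversarial-image challenges, followed by the base64 encoding of the reference image: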

L0_THRES = 54.1

L2_THRES = 6.45

src = np.frombuffer(base64.b64decode(

'AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPgAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOPiYj8AAIA/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADl5OQ+AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA6ejoPQAAgD/j4mI/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/jo0NPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD6trKw+raysPo6NDT/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD7j4mI/AACAPwAAgD8AAIA/AACAP+XkZD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAPwAAgD/l5OQ+5eRkPuPiYj/HxkY/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAADl5GQ+AACAPwAAgD+trKw+AAAAAAAAAACrqio/AACAPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAA
AAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NPwAAgD/l5OQ+AAAAAAAAAAAAAAAA6ejoPQAAgD/HxkY/AAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/AACAPwAAAAAAAAAAAAAAAKuqKj8AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAADHxkY/4+JiPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/l5OQ+AAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAACtrKw+AACAP+Xk5D4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAK2srD4AAIA/AACAP6uqKj/l5GQ+AACAPwAAgD/p6Og9AAAAAAAAAACtrKw+4+JiP+PiYj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPuPiYj8AAIA/AACAPwAAgD8AAIA/raysPq2srD6rqio/AACAPwAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADp6Og9jo0NPwAAgD8AAIA/AACAPwAAgD8AAIA/AACAP8fGRj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA4+JiPwAAgD8AAIA/jo0NP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/AACAP6uqKj+rqio/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NP+PiYj+rqio/5eTkPuno6D0AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA=='),

dtype="float32")

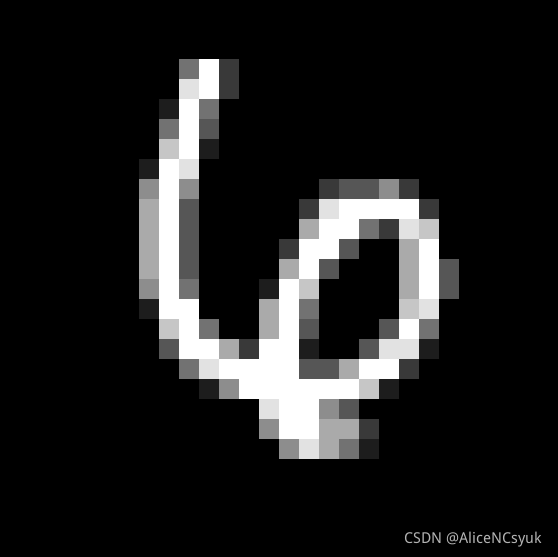

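Dumping this array as an image shows that it is the digit 4 from the MNIST dataset, shown below. This hints at the dataset the model was trained on, and the image is used again later in the attack.

It also explains why the model's last layer (fc2) has 10 units: the network was originally trained as a 10-class classifier.

Finally comes hash_buf, the core function of the challenge. It first receives the byte stream obtained by base64-decoding the image sent by the player and converts it to a numpy float32 array. It then checks that the array has 28*28 elements (every MNIST image is single-channel, 28x28) and clips the pixel values to [0, 1]: values below 0 become 0, values above 1 become 1, and everything else is unchanged.

def hash_buf(buf):
    global src
    arr = np.frombuffer(buf, dtype="float32")
    if arr.shape[0] != 28 * 28:
        raise BufferError("hacker")
    arr = np.clip(arr, 0, 1)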
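For reference, the byte layout that `hash_buf` expects can be reproduced with the standard library alone. This is a sketch that assumes the server base64-decodes the submitted line before calling `hash_buf` (as the exploit's `base64.b64encode(data)` suggests) and that floats are little-endian; `encode_image` and `decode_and_clip` are hypothetical helper names.

```python
import base64
import struct

PIXELS = 28 * 28  # one single-channel MNIST image


def encode_image(pixels):
    """Pack 784 floats as little-endian float32 and base64-encode them."""
    if len(pixels) != PIXELS:
        raise ValueError("expected 784 pixel values")
    return base64.b64encode(struct.pack(f'<{PIXELS}f', *pixels))


def decode_and_clip(payload):
    """Approximate the first steps of hash_buf: decode, check size, clip."""
    raw = base64.b64decode(payload)
    if len(raw) != PIXELS * 4:  # 4 bytes per float32
        raise BufferError("hacker")
    values = struct.unpack(f'<{PIXELS}f', raw)
    return [min(max(v, 0.0), 1.0) for v in values]


image = [0.0] * PIXELS
image[0], image[1], image[2] = -0.5, 0.25, 1.5
clipped = decode_and_clip(encode_image(image))
print(clipped[:3])  # [0.0, 0.25, 1.0]
```

Because of the clipping, sending values outside [0, 1] gains nothing: the norms and the hash are both computed on the clipped array.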

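It then computes the difference between the submitted image and src and takes its L0 and L2 norms. If either norm exceeds its threshold, the attempt fails; with L0_THRES = 54.1, at most 54 pixels may differ from src. Otherwise the image is fed to the network, the feature vector from the penultimate layer (fc1) is converted to a numpy array and hashed, and if the result equals need, that is 0x1046822029745355ca048937f624fb87, we get the flag.

    diff = arr - src
    #print(np.linalg.norm(diff, 0), np.linalg.norm(diff, 2))
    if np.linalg.norm(diff, 0) > L0_THRES or np.linalg.norm(diff, 2) > L2_THRES:
        raise BufferError("attack failed")
    arr = torch.FloatTensor(arr).reshape(1, 1, 28, 28)
    # need=0x1046822029745355ca048937f624fb87
    # hash(src)=0x91460a702d62d166a9942cb7fc10d7a2
    return hex_hash(model(arr))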
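The two norm checks are easy to reproduce without numpy. The sketch below mirrors what `np.linalg.norm(diff, 0)` and `np.linalg.norm(diff, 2)` compute for a flat vector; `l0_norm`, `l2_norm` and `within_budget` are illustrative names, and the test images are made up for the example.

```python
import math

L0_THRES = 54.1
L2_THRES = 6.45


def l0_norm(diff):
    """Number of non-zero entries, as np.linalg.norm(diff, 0) computes it."""
    return sum(1 for d in diff if d != 0)


def l2_norm(diff):
    """Euclidean length, as np.linalg.norm(diff, 2) computes it for a vector."""
    return math.sqrt(sum(d * d for d in diff))


def within_budget(adv, src):
    """Return True if adv passes the same norm checks as hash_buf."""
    diff = [a - s for a, s in zip(adv, src)]
    return l0_norm(diff) <= L0_THRES and l2_norm(diff) <= L2_THRES


src = [0.0] * 784
ok = src[:]
for i in range(54):       # change exactly 54 pixels by 0.5 each
    ok[i] = 0.5
too_many = ok[:]
too_many[54] = 0.5        # a 55th changed pixel breaks the L0 limit

print(within_budget(ok, src))        # True
print(within_budget(too_many, src))  # False
```

Changing 54 pixels by the full range of 1.0 would give an L2 norm of sqrt(54), about 7.35, so the L2 limit of 6.45 can still bind even when the L0 limit is met.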

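In short, to solve the challenge we need to generate an adversarial image whose hash (derived from the network's feature vector) is 0x1046822029745355ca048937f624fb87 while staying close to the original image, that is, within the given L0 and L2 constraints.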

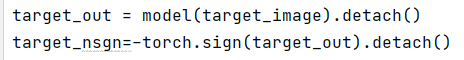

首先给出完整的exp的攻击部分,来自出题人

model = load_model("./model/convNet.pt")

target_image=torch.FloatTensor(np.load("mnist.npz")['test_images'][8583]).reshape(1,1,28,28)

target_out = model(target_image).detach()

target_nsgn=-torch.sign(target_out).detach()

image = torch.FloatTensor(np.load("mnist.npz")['test_images'][22]).reshape(1,1,28,28)#这个就是src

adv=Variable(image,requires_grad=True)

loss_f=nn.L1Loss()

loss_l2=nn.MSELoss()

def attack():

global adv

max_sim = 0

cnt = 0

loss_cnt = 0

losses = []

lr=0.1

RATIO=10

itercnt=0

best = 9999

best_l0 = 9999

best_l2 = 9999

save_image(image.squeeze().detach().numpy(), 'origion.tiff')

save_image(target_image.squeeze().detach().numpy(), 'target.tiff')

for i in range(10000):

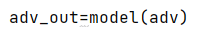

adv_out=model(adv)

l1l=loss_f(adv,image)*RATIO

hashl=torch.sum(F.relu(target_nsgn*adv_out))

l2l=loss_l2(adv,image)

loss=l1l+hashl

loss.backward()

adv.requires_grad=False

if hex_hash(adv_out)==hex_hash(target_out) and torch.sum(adv!=image).numpy() == 54 and l2l < 0.053:

break

adv=adv-adv.grad*lr

adv=adv.clamp(0,1)

if hex_hash(adv_out)==hex_hash(target_out):

best=min(best,torch.sum(torch.abs(adv-image)>0.005).numpy())

best_l0=min(best_l0,torch.sum(adv!=image).numpy())

best_l2 = min(best_l2, l2l)

itercnt+=1

mask=(torch.abs(adv-image)<np.clip(0.02+0.04*itercnt,0.02,0.4)).type(torch.FloatTensor)

adv=adv*(1-mask)+image*mask

adv.requires_grad = True

save_image(adv.squeeze().detach().numpy(), 'collision_pic.tiff')

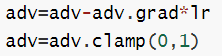

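The core of the attack is an FGSM-style gradient attack. Strictly speaking, the update adv - adv.grad*lr uses the raw gradient rather than its sign and is applied over many iterations, so it is iterative gradient descent on the input image.

The gradient step is what makes the hash of the adversarial image more similar to the target hash. The image gradient comes from a loss computed from target_nsgn (the negated sign of the target feature vector: -1 where the target feature is positive, +1 where it is negative) and adv_out, the feature vector of the adversarial image. Stepping against this gradient moves the image in the direction that makes the two feature vectors agree in sign, so the hash of the adversarial image eventually equals the target hash. That is why this gradient attack works for this challenge.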
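For comparison, the sketch below contrasts a textbook FGSM-style step, which moves every pixel by a fixed amount according to the sign of the gradient, with the raw-gradient step `adv - adv.grad*lr` that appears in the exploit. It uses plain Python lists instead of tensors; `fgsm_step`, `gradient_step` and `epsilon` are illustrative names, not taken from the exploit.

```python
def clamp01(value):
    """Keep a pixel inside the valid [0, 1] range."""
    return min(max(value, 0.0), 1.0)


def fgsm_step(image, grad, epsilon):
    """FGSM-style descent step: move each pixel by epsilon against sign(grad)."""
    def sign(g):
        return (g > 0) - (g < 0)
    return [clamp01(x - epsilon * sign(g)) for x, g in zip(image, grad)]


def gradient_step(image, grad, lr):
    """Raw-gradient step: move each pixel proportionally to its gradient."""
    return [clamp01(x - lr * g) for x, g in zip(image, grad)]


image = [0.5, 0.5, 0.5, 0.5]
grad = [2.0, -0.5, 0.0, -8.0]

print(fgsm_step(image, grad, epsilon=0.25))  # [0.25, 0.75, 0.5, 0.75]
print(gradient_step(image, grad, lr=0.125))  # [0.25, 0.5625, 0.5, 1.0]
```

With the raw-gradient step, pixels with small gradients change only slightly, which is one way an attack ends up with many tiny per-pixel differences from the reference image.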

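I think the design of the hash loss is a highlight of this exploit, although this kind of hash loss is not universal: a different network may need a redesigned loss. Here the author multiplies the two feature vectors element-wise, after the target has been reduced to its sign and multiplied by -1, then applies ReLU to zero out the positions whose signs already agree, and sums what is left. When the loss is 0, every position of the adversarial feature vector agrees in sign with the target, so minimizing this loss effectively drives the adversarial hash toward the target hash.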
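The loss `torch.sum(F.relu(target_nsgn*adv_out))` can be written out by hand to see what it penalizes. The following is a plain-Python sketch of the same expression on short lists; `hash_loss` is an illustrative name and the feature values are made up.

```python
def hash_loss(target_features, adv_features):
    """Sum of ReLU(-sign(target) * adv): penalizes only sign disagreements."""
    def sign(v):
        return (v > 0) - (v < 0)
    return sum(max(0.0, -sign(t) * a)
               for t, a in zip(target_features, adv_features))


target = [1.5, -0.2, 0.7, -3.0]

# Same signs everywhere: nothing to penalize.
print(hash_loss(target, [0.1, -5.0, 2.0, -0.4]))   # 0.0
# Positions 0 and 3 have the wrong sign and contribute their magnitude.
print(hash_loss(target, [-0.5, -5.0, 2.0, 0.25]))  # 0.75
```

Each wrong-signed feature contributes its own magnitude, so the gradient only pushes on the features that still disagree and stops pushing as soon as they cross zero.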

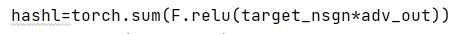

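With the hash matched, let's look at how to handle the L0 and L2 constraints. L0 limits how many pixels we change, while L2 limits how far apart the two images are overall. numpy computes the L0 norm as sum(x != 0), which is a count and gives no useful gradient, so we use the L1 loss nn.L1Loss() as a differentiable stand-in for L0, and nn.MSELoss() to constrain L2.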
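The `l2l < 0.053` test in the exploit and the server's `L2_THRES = 6.45` are the same bound in different units: `nn.MSELoss()` with its default mean reduction averages the squared differences over all 784 pixels, whereas the server takes the square root of their sum. A short check of that arithmetic (the helper name `mse_to_l2` is illustrative):

```python
import math

N_PIXELS = 28 * 28
L2_THRES = 6.45


def mse_to_l2(mse, n=N_PIXELS):
    """Convert a mean squared error over n pixels to the Euclidean norm."""
    return math.sqrt(mse * n)


# Largest MSE that still satisfies the server's L2 limit.
mse_limit = L2_THRES ** 2 / N_PIXELS
print(round(mse_limit, 5))           # 0.05306
# The exploit's cut-off of 0.053 sits just inside that limit.
print(mse_to_l2(0.053) < L2_THRES)   # True
```

In symbols, an MSE of m over n pixels corresponds to an L2 norm of sqrt(m * n), so 6.45 squared divided by 784 gives the 0.053 used in the stopping condition.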

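In practice, tuning showed that the L1 loss alone is enough; adding the L2 loss actually made both the L0 and L2 constraints unreachable. To make the constraints easier to satisfy, the adversarial image is initialized to src, the image that was base64-encoded earlier.

Loss terms alone are not enough, though. A loss is a soft constraint: it can get the hash to match, but because both the loss and the gradient update act on the whole image, the adversarial image usually ends up differing from the reference image by a tiny amount in many pixels, which makes the hard L0 constraint difficult to pass.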

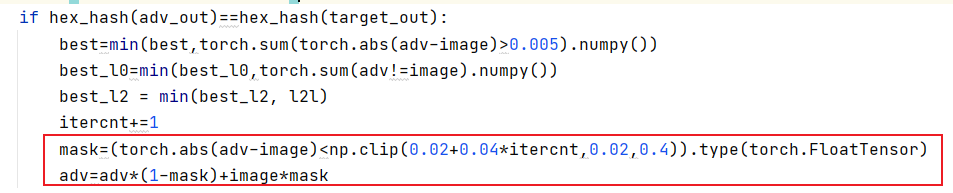

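The challenge author therefore uses a heuristic hard mask to solve this problem. The mask is generated as follows:

Whenever the hash already matches, the mask selects the pixels whose absolute difference from the reference image is below the threshold theta = np.clip(0.02+0.04*itercnt, 0.02, 0.4) and resets them to the reference pixel values, which reduces L0. The heuristic part is that theta grows as itercnt increases, capped at 0.4, which pushes the attack toward fewer modified pixels that each have a larger effect on the hash, lowering both L0 and L2.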
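The masking step can be sketched on a handful of pixels in plain Python. `mask_threshold`, `apply_mask` and `l0` are illustrative names; the schedule itself is the `np.clip(0.02+0.04*itercnt, 0.02, 0.4)` expression from the exploit, and the pixel values are made up.

```python
def mask_threshold(itercnt):
    """Threshold schedule: 0.02 + 0.04*itercnt, clipped to [0.02, 0.4]."""
    return min(max(0.02 + 0.04 * itercnt, 0.02), 0.4)


def apply_mask(adv, ref, theta):
    """Reset pixels that differ from ref by less than theta; keep the rest."""
    return [r if abs(a - r) < theta else a for a, r in zip(adv, ref)]


def l0(adv, ref):
    """Number of pixels in which adv differs from ref."""
    return sum(1 for a, r in zip(adv, ref) if a != r)


ref = [0.0, 0.5, 1.0, 0.25]
adv = [0.01, 0.9, 1.0, 0.5]

theta = mask_threshold(1)             # about 0.06 for itercnt = 1
pruned = apply_mask(adv, ref, theta)
print(pruned)                         # [0.0, 0.9, 1.0, 0.5]
print(l0(adv, ref), l0(pruned, ref))  # 3 2
print(mask_threshold(50))             # 0.4
```

The schedule reaches its cap once itercnt is 10 or more, after which any change smaller than 0.4 is discarded and only large, deliberate pixel changes survive.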

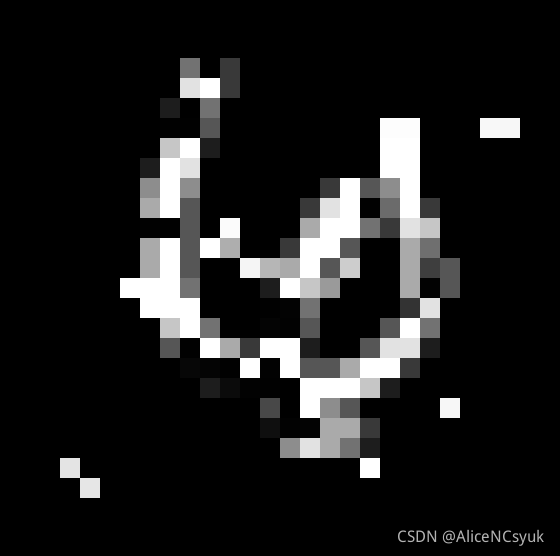

The final adversarial image is shown below:

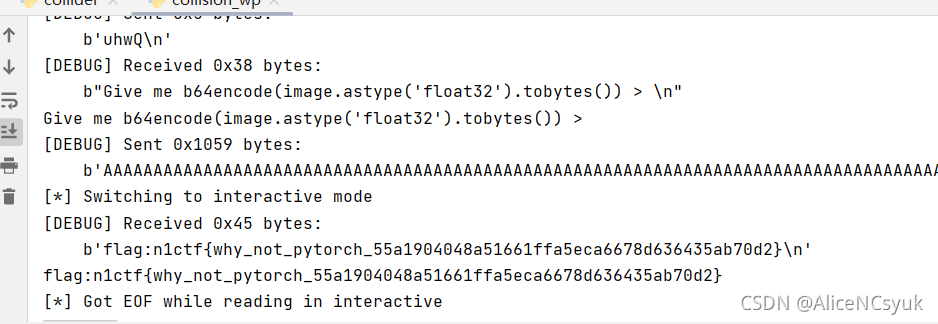

The flag obtained is shown below:

The complete attack script is as follows:

import base64
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.autograd import Variable
from pwn import *
from PIL import Image
import hashlib
import string

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        return x

def load_model(path):
    model = Net()
    model.load_state_dict(torch.load(path, map_location="cpu"))
    return model.eval()

def save_image(arr, path):
    im = Image.fromarray(arr)
    im.save(path)

def get_hash_sim(adv_hash, std_hash):
    cnt = 0
    for i in range(len(adv_hash[0])):
        tmp1 = adv_hash[0][i] > 0
        tmp2 = std_hash[0][i] > 0
        if tmp1 != tmp2:
            cnt += 1
    return 1 - (cnt / len(adv_hash[0]))

def cal_hash_bits(out):
    return out

def hex_hash(hash_bits):
    x = hash_bits.detach().numpy()
    res = [str(int(i > 0)) for i in x[0]]
    return hex(int(''.join(res), 2))

model = load_model("./model/convNet.pt")
target_image=torch.FloatTensor(np.load("mnist.npz")['test_images'][8583]).reshape(1,1,28,28)
target_out = model(target_image).detach()
target_nsgn=-torch.sign(target_out).detach()
image = torch.FloatTensor(np.load("mnist.npz")['test_images'][22]).reshape(1,1,28,28)
adv=Variable(image,requires_grad=True)
loss_f=nn.L1Loss()
loss_l2=nn.MSELoss()

L0_THRES = 54.1
L2_THRES = 6.45

src = np.frombuffer(base64.b64decode(

'AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPgAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOPiYj8AAIA/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADl5OQ+AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA6ejoPQAAgD/j4mI/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/jo0NPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD6trKw+raysPo6NDT/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAOXkZD7j4mI/AACAPwAAgD8AAIA/AACAP+XkZD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAPwAAgD/l5OQ+5eRkPuPiYj/HxkY/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAADl5GQ+AACAPwAAgD+trKw+AAAAAAAAAACrqio/AACAPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACrqio/AACAP62srD4AAAA
AAAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAq6oqPwAAgD+trKw+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NPwAAgD/l5OQ+AAAAAAAAAAAAAAAA6ejoPQAAgD/HxkY/AAAAAAAAAAAAAAAAAAAAAKuqKj8AAIA/raysPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOno6D0AAIA/AACAPwAAAAAAAAAAAAAAAKuqKj8AAIA/5eTkPgAAAAAAAAAAAAAAAAAAAADHxkY/4+JiPwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAx8ZGPwAAgD/l5OQ+AAAAAAAAAACrqio/AACAP62srD4AAAAAAAAAAAAAAACtrKw+AACAP+Xk5D4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAK2srD4AAIA/AACAP6uqKj/l5GQ+AACAPwAAgD/p6Og9AAAAAAAAAACtrKw+4+JiP+PiYj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA5eTkPuPiYj8AAIA/AACAPwAAgD8AAIA/raysPq2srD6rqio/AACAPwAAgD/l5GQ+AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADp6Og9jo0NPwAAgD8AAIA/AACAPwAAgD8AAIA/AACAP8fGRj/p6Og9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA4+JiPwAAgD8AAIA/jo0NP62srD4AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI6NDT8AAIA/AACAP6uqKj+rqio/5eRkPgAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAjo0NP+PiYj+rqio/5eTkPuno6D0AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA=='),

dtype="float32")

def hash_buf(buf):
    global src
    arr = np.frombuffer(buf, dtype="float32")
    if arr.shape[0] != 28 * 28:
        raise BufferError("hacker")
    arr = np.clip(arr, 0, 1)
    diff = arr - src
    print(np.linalg.norm(diff, 0), np.linalg.norm(diff, 2))
    if np.linalg.norm(diff, 0) > L0_THRES or np.linalg.norm(diff, 2) > L2_THRES:
        raise BufferError("attack failed")
    arr = torch.FloatTensor(arr).reshape(1, 1, 28, 28)
    # need=0x1046822029745355ca048937f624fb87
    # hash(src)=0x91460a702d62d166a9942cb7fc10d7a2
    return hex_hash(model(arr))

def getsha256(s):
    sha256=hashlib.sha256()
    sha256.update(str(s).encode('utf-8'))
    ans=sha256.hexdigest()
    return ans

def attack():
    global adv
    # load model, seed, image
    max_sim = 0
    cnt = 0
    loss_cnt = 0
    losses = []
    lr=0.1
    RATIO=10
    itercnt=0
    best = 9999
    best_l0 = 9999
    best_l2 = 9999
    save_image(image.squeeze().detach().numpy(), 'origion.tiff')
    save_image(target_image.squeeze().detach().numpy(), 'target.tiff')
    for i in range(10000):
        adv_out=model(adv)
        l1l=loss_f(adv,image)*RATIO
        hashl=torch.sum(F.relu(target_nsgn*adv_out))
        l2l=loss_l2(adv,image)
        loss=l1l+hashl
        loss.backward()
        adv.requires_grad=False
        # Recompute the norms exactly the way the server does.
        data1 = adv.squeeze().detach().numpy().astype("float32").tobytes()
        arr1 = np.frombuffer(data1, dtype="float32")
        arr1 = np.clip(arr1, 0, 1)
        diff = arr1 - src
        a,b=(np.linalg.norm(diff, 0), np.linalg.norm(diff, 2))
        if hex_hash(adv_out)==hex_hash(target_out) and a< L0_THRES and b < L2_THRES:
            # Success: save the image, verify it locally, then submit it.
            save_image(adv.squeeze().detach().numpy(), 'collision_pic.tiff')
            data = adv.squeeze().detach().numpy().astype("float32").tobytes()
            print(hash_buf(data))
            r = remote("175.27.158.159", "59101")
            context(log_level='debug')
            tmpstr = r.recvline().decode().replace('\n', '')
            sha_ans = tmpstr[45:109]
            sha_str = tmpstr[12:40]
            print(sha_str)
            print(sha_ans)
            print(tmpstr)
            # Proof of work: brute-force the 4-letter prefix.
            prefix = ''
            flag = False
            ss = string.ascii_letters
            for i in ss:
                if (flag):
                    break
                for j in ss:
                    if (flag):
                        break
                    for k in ss:
                        if (flag):
                            break
                        for l in ss:
                            xxxx = i + j + k + l
                            if (sha_ans == getsha256(xxxx + sha_str)):
                                prefix = xxxx
                                print('in')
                                flag = True
                                break
            print(prefix)
            r.recvline()
            r.sendline(prefix)
            tt = r.recvline().decode().replace('\n', '')
            print(tt)
            r.sendline(base64.b64encode(data))
            r.interactive()
            exit(0)
        # Gradient step on the image, then clamp back to valid pixel values.
        adv=adv-adv.grad*lr
        adv=adv.clamp(0,1)
        update = f"Iteration #{i}: l1={l1l} l2loss={l2l} hashloss={hashl}"
        print(update)
        print(hex_hash(adv_out))
        print(hex_hash(target_out))
        print("diffcount",torch.sum(torch.abs(adv-image)>0.005))
        print(f"l0 loss: {torch.sum(adv!=image)}")
        print("best ", best)
        print(f"best_l0: {best_l0}")
        print(f"best_l2: {best_l2}")
        if hex_hash(adv_out)==hex_hash(target_out):
            best=min(best,torch.sum(torch.abs(adv-image)>0.005).numpy())
            best_l0=min(best_l0,torch.sum(adv!=image).numpy())
            best_l2 = min(best_l2, l2l)
            itercnt+=1
            # Hard mask: reset small changes to the original pixel values.
            mask=(torch.abs(adv-image)<np.clip(0.02+0.04*itercnt,0.02,0.4)).type(torch.FloatTensor)
            adv=adv*(1-mask)+image*mask
        adv.requires_grad = True
    save_image(adv.squeeze().detach().numpy(), 'collision_pic.tiff')

if __name__ == "__main__":
    attack()
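The four nested loops in the script brute-force a proof of work: they look for a 4-letter prefix such that the SHA-256 of the prefix followed by the server-supplied suffix equals the server-supplied digest. A more compact sketch of the same search using `itertools.product` is shown below. `solve_pow` and its parameters are illustrative names, and the server's exact message format is assumed to be whatever the string slicing in the script implies.

```python
import hashlib
import itertools
import string


def solve_pow(suffix, digest, length=4, alphabet=string.ascii_letters):
    """Return the prefix with sha256(prefix + suffix) == digest, or None."""
    for candidate in itertools.product(alphabet, repeat=length):
        prefix = ''.join(candidate)
        if hashlib.sha256((prefix + suffix).encode('utf-8')).hexdigest() == digest:
            return prefix
    return None


# Self-check with a 2-letter prefix so the search finishes quickly.
suffix = 'exampleSuffix'
digest = hashlib.sha256(('Qz' + suffix).encode('utf-8')).hexdigest()
print(solve_pow(suffix, digest, length=2))  # Qz
```

With the default length of 4 and 52 letters, the search covers at most 52**4 (about 7.3 million) candidates, which is the same space the nested loops walk through.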