For deploying Kubernetes with kubeadm, see:

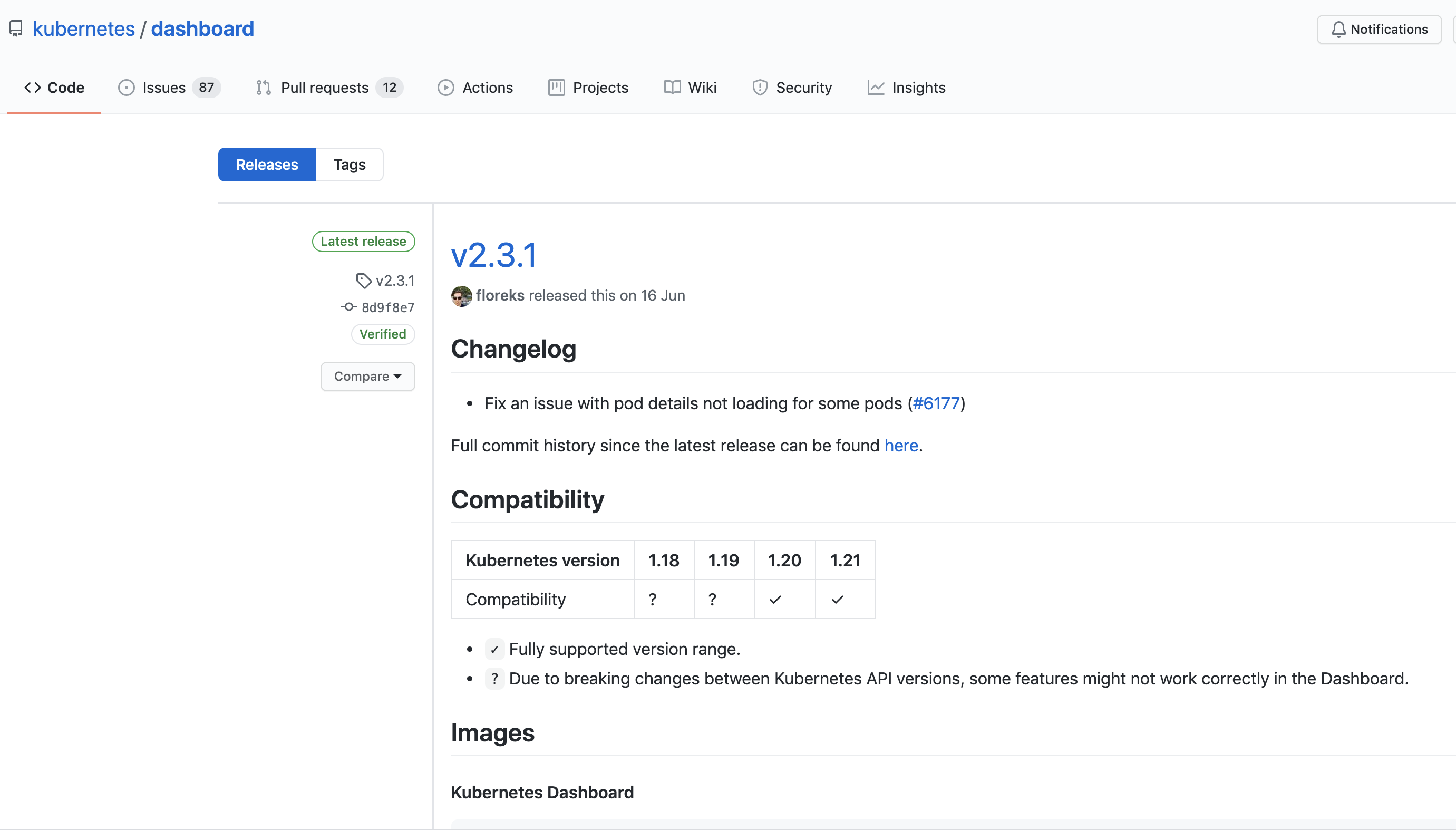

3 Deploy the dashboard

https://github.com/kubernetes/dashboard

3.1 Deploy dashboard v2.3.1

[root@K8s-master1 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

[root@K8s-master1 ~]# mv recommended.yaml dashboard-2.3.1.yaml

# Edit dashboard-2.3.1.yaml: add the two marked lines (lines 40 and 44 of the file)

[root@K8s-master1 ~]# cat dashboard-2.3.1.yaml

......
32 kind: Service
33 apiVersion: v1
34 metadata:
35   labels:
36     k8s-app: kubernetes-dashboard
37   name: kubernetes-dashboard
38   namespace: kubernetes-dashboard
39 spec:
40   type: NodePort          # add this line
41   ports:
42     - port: 443
43       targetPort: 8443
44       nodePort: 30002     # add this line
45   selector:
46     k8s-app: kubernetes-dashboard
......
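The two additions can also be applied without opening an editor. The following is a minimal sketch, not part of the original walkthrough: the helper name `add_nodeport` is hypothetical, and it assumes the manifest keeps the layout shown above (a Service-level `ports:` line directly followed by `- port: 443`, and a single `targetPort: 8443` line).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print <manifest> with the NodePort lines added.
# Assumes the layout shown above; it matches text and does not parse YAML.
add_nodeport() {  # usage: add_nodeport <manifest> <nodePort>
  awk -v np="$2" '
    /^  ports:$/ { held = $0; next }          # hold a Service-level "ports:" line
    held != "" {
      # emit "type: NodePort" only in front of the Service that exposes port 443
      if ($0 ~ /- port: 443$/) print "  type: NodePort"
      print held; held = ""
    }
    { print }
    /targetPort: 8443$/ { print "      nodePort: " np }
    END { if (held != "") print held }
  ' "$1"
}
```

A possible use is `add_nodeport dashboard-2.3.1.yaml 30002 > dashboard-nodeport.yaml`, followed by a `diff` of the two files before applying the result. The chosen port has to fall inside the cluster's NodePort range, which is 30000-32767 unless it was changed.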

[root@K8s-master1 ~]# cat admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

[root@K8s-master1 ~]#

[root@K8s-master1 ~]# kubectl apply -f dashboard-2.3.1.yaml -f admin-user.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

[root@K8s-master1 ~]#

[root@K8s-master1 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.200.131.55 <none> 8000/TCP 12m

kubernetes-dashboard NodePort 10.200.39.13 <none> 443:30002/TCP 12m

[root@K8s-master1 ~]#

[root@K8s-master1 ~]# ss -tnl|grep 30002

LISTEN 0 128 0.0.0.0:30002 0.0.0.0:*

[root@K8s-master1 ~]#

Check the dashboard status

[root@K8s-master1 ~]# kubectl get pod -A | grep kubernetes-dashboard

kubernetes-dashboard dashboard-metrics-scraper-79c5968bdc-tkch8 1/1 Running 0 22m

kubernetes-dashboard kubernetes-dashboard-658485d5c7-t6g7k 1/1 Running 0 22m

[root@K8s-master1 ~]#

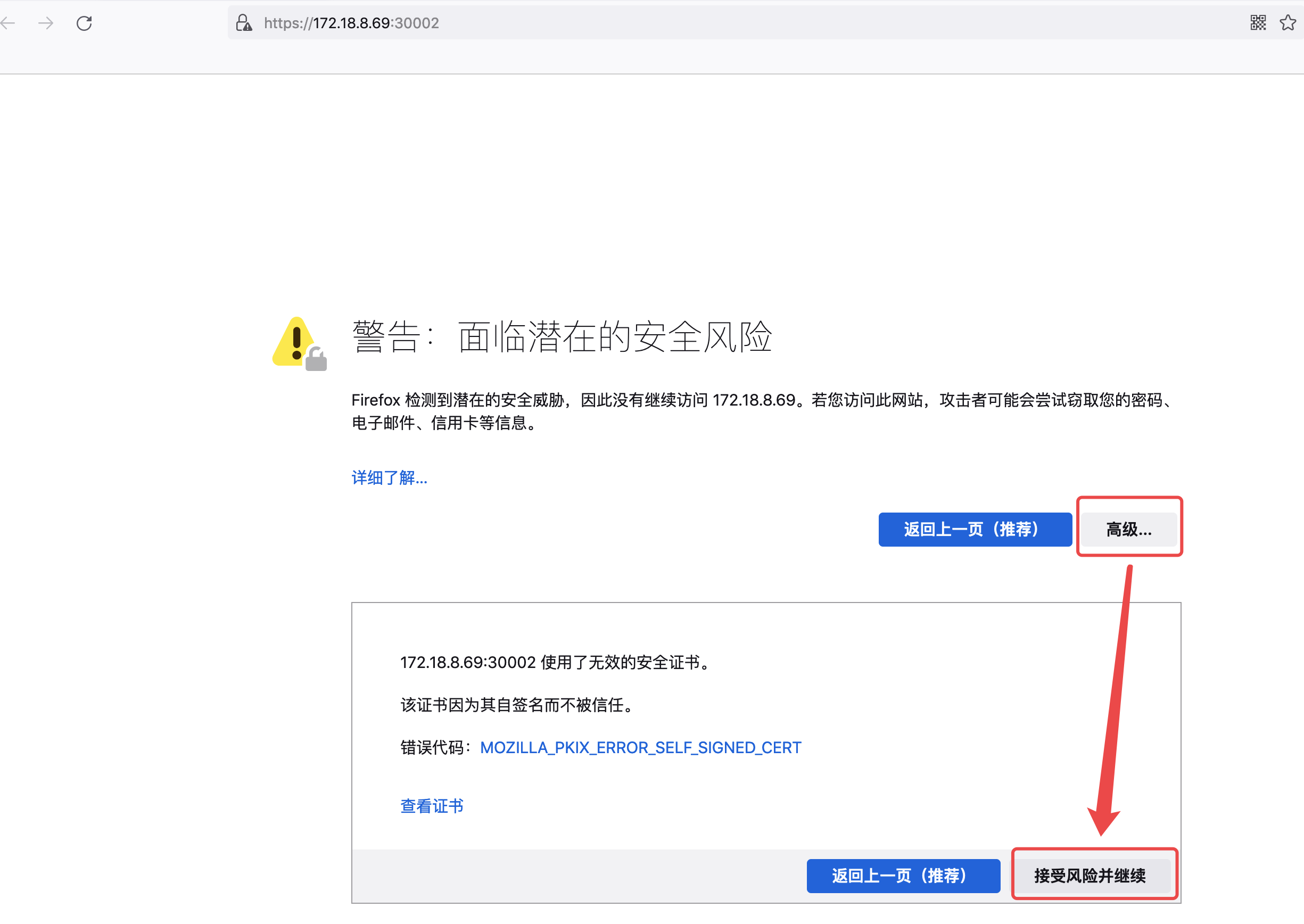

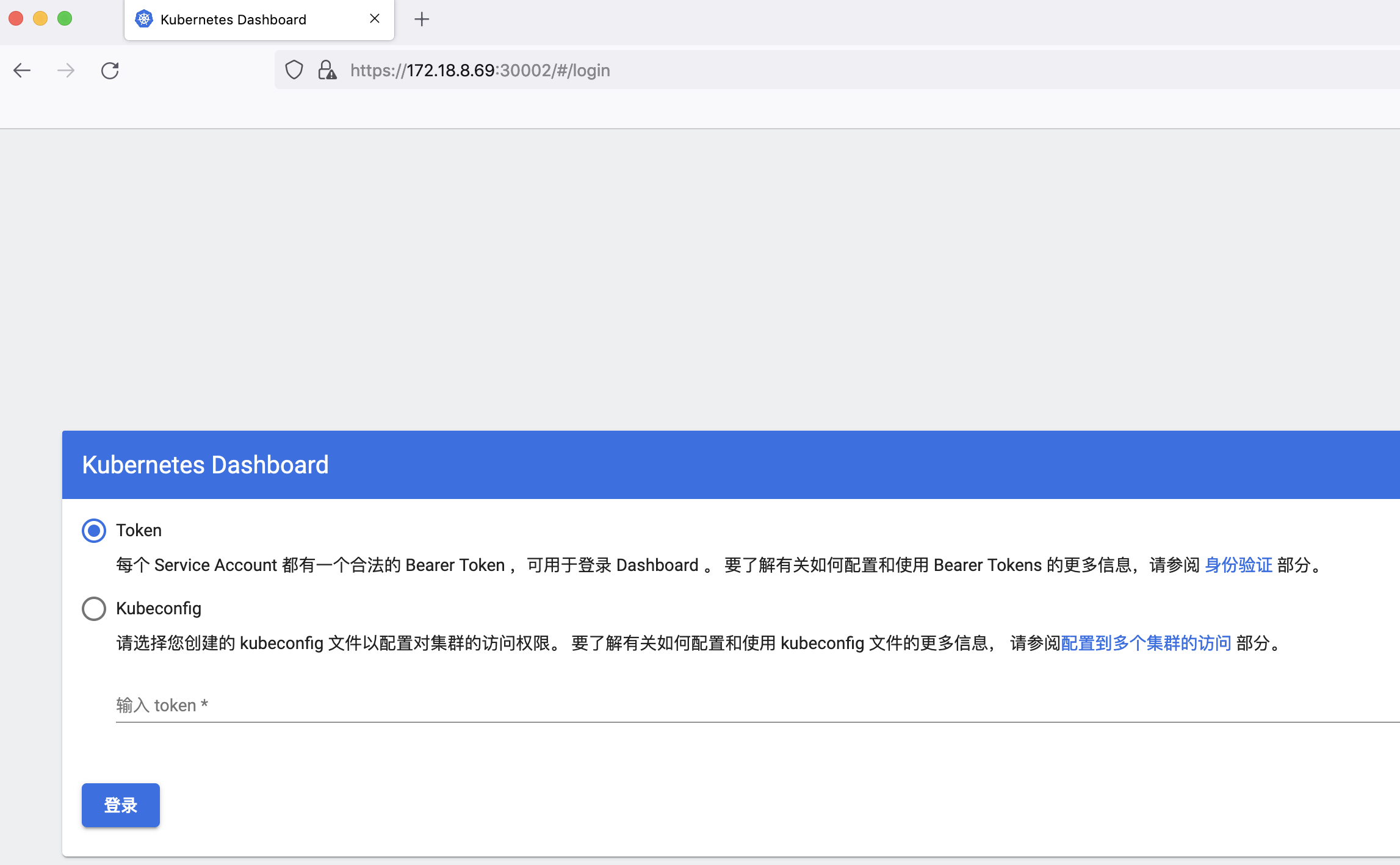

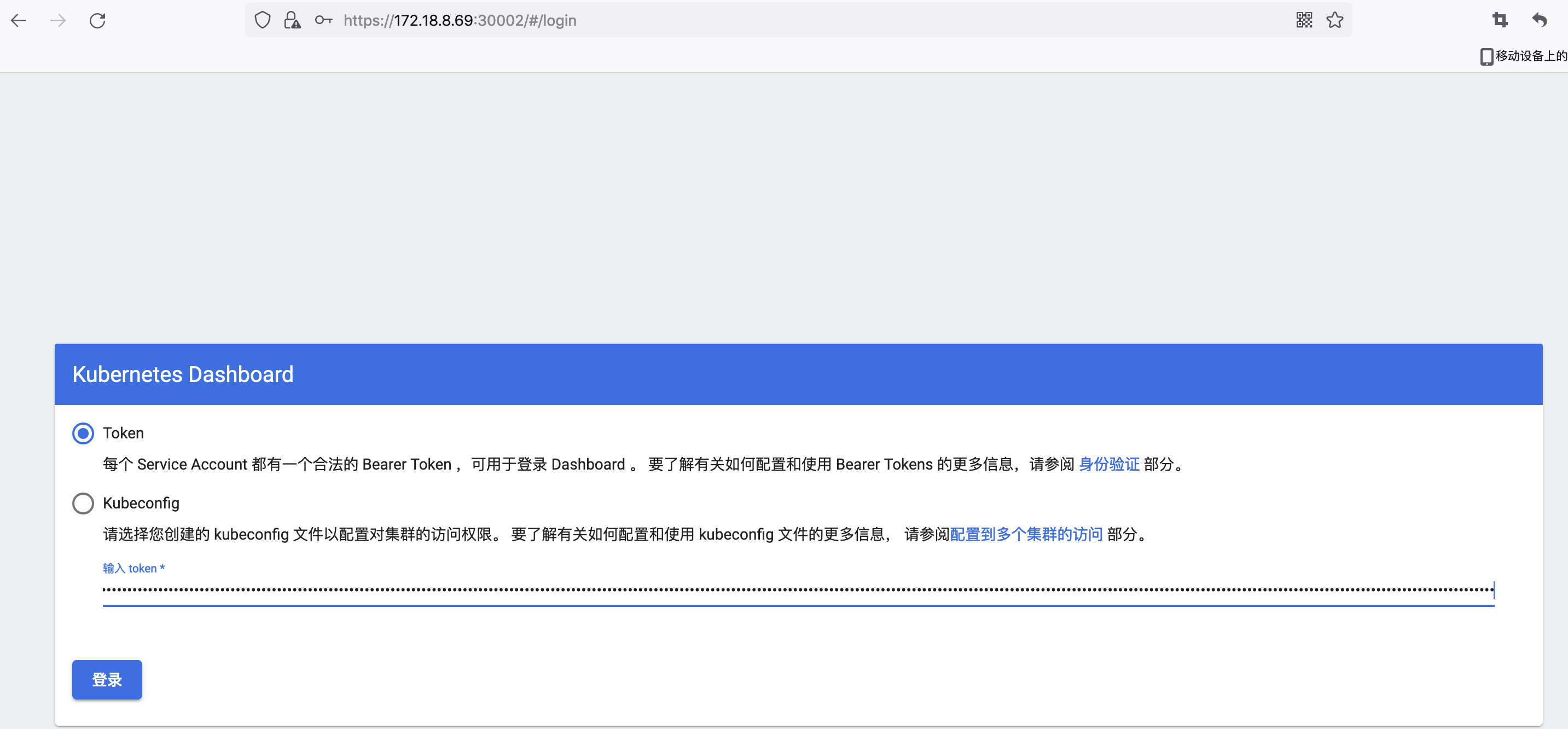

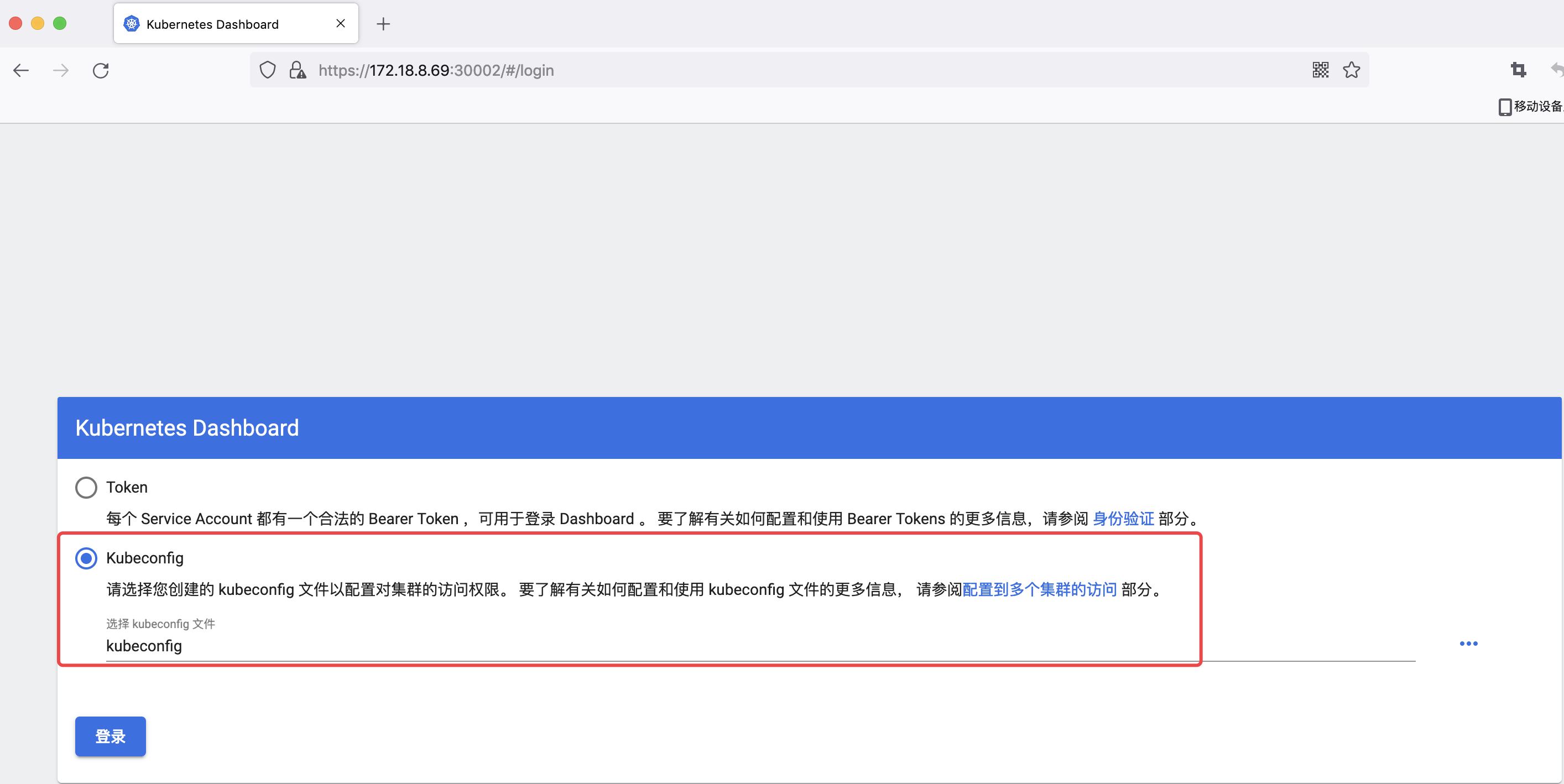

3.2 Access the dashboard

# IP of any node + port (30002)

https://172.18.8.69:30002/

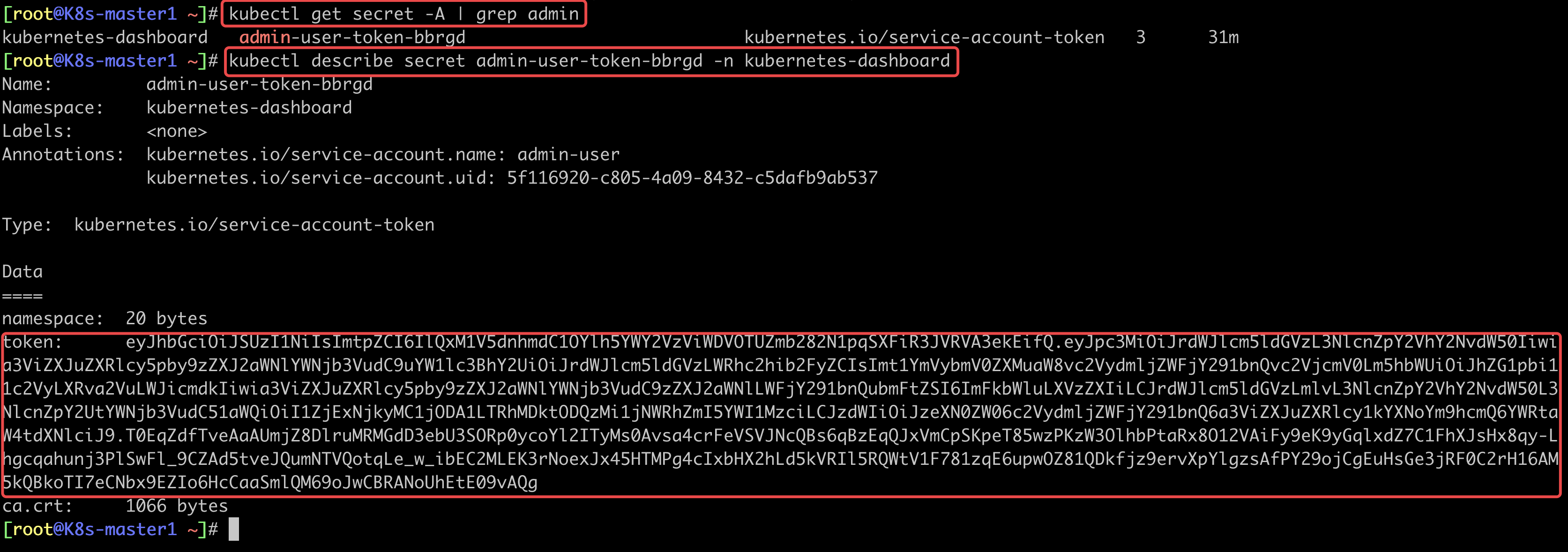

3.3 Get the login token

[root@K8s-master1 ~]# kubectl get secret -A | grep admin

kubernetes-dashboard admin-user-token-bbrgd kubernetes.io/service-account-token 3 31m

[root@K8s-master1 ~]# kubectl describe secret admin-user-token-bbrgd -n kubernetes-dashboard

Name: admin-user-token-bbrgd

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 5f116920-c805-4a09-8432-c5dafb9ab537

Type: kubernetes.io/service-account-token

Data

====

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlQxM1V5dnhmdC1OYlh5YWY2VzViWDVOTUZmb282N1pqSXFiR3JVRVA3ekEifQ.

eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Ii

wia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1l

c3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1Ym

VybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hb

WUiOiJhZG1pbi11c2VyLXRva2VuLWJicmdkIiwia3ViZXJu

ZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY2

91bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVz

LmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC

51aWQiOiI1ZjExNjkyMC1jODA1LTRhMDktODQzMi1jNWRh

ZmI5YWI1MzciLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY29

1bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlci

J9.T0EqZdfTveAaAUmjZ8DlruMRMGdD3ebU3SORp0ycoYl2

ITyMs0Avsa4crFeVSVJNcQBs6qBzEqQJxVmCpSKpeT85wzPK

zW3OlhbPtaRx8O12VAiFy9eK9yGqlxdZ7C1FhXJsHx8qy-Lh

gcqahunj3PlSwFl_9CZAd5tveJQumNTVQotqLe_w_ibEC2ML

EK3rNoexJx45HTMPg4cIxbHX2hLd5kVRIl5RQWtV1F781zqE

6upwOZ81QDkfjz9ervXpYlgzsAfPY29ojCgEuHsGe3jRF0C2

rH16AM5kQBkoTI7eCNbx9EZIo6HcCaaSmlQM69oJwCBRANoUhEtE09vAQg

ca.crt: 1066 bytes

[root@K8s-master1 ~]#

Paste the token into the login page to sign in to the dashboard.
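A service-account token of this kind is a JWT, so its middle segment can be decoded to confirm which account it belongs to before it is pasted anywhere. This is a minimal sketch: the function name `jwt_payload` is hypothetical, and it assumes a `base64` command that accepts `-d` (as GNU coreutils does).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the JSON payload (second segment) of a JWT.
# It only decodes the payload; it does not verify the signature.
jwt_payload() {  # usage: jwt_payload <token>
  local p
  # base64url -> base64: map "_" to "/" and "-" to "+"
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the "=" padding that JWTs strip
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="${p}="; done
  printf '%s' "$p" | base64 -d
}
```

The token has to be passed as one unbroken string. For the token shown above, the decoded payload is expected to name the `admin-user` service account in the `kubernetes-dashboard` namespace.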

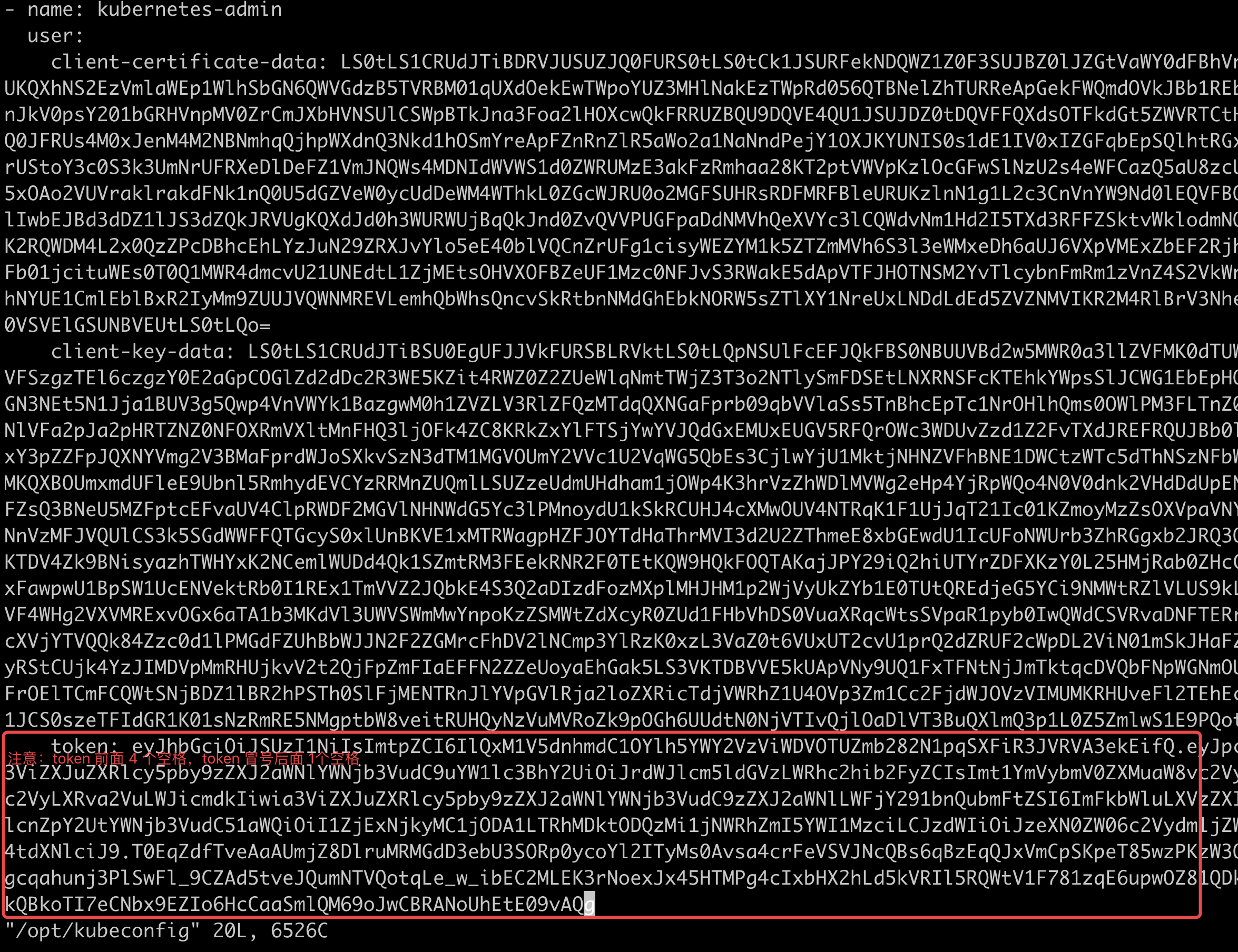

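3.4 Create a kubeconfig file to configure access to the cluster

If the dashboard is left idle, the session logs out after 15 minutes and the token has to be pasted again. Copying the token every time is tedious, so we can create our own kubeconfig file and keep it on the desktop, which is more convenient.

# Copy the credentials file under a new name

[root@K8s-master1 ~]# cp /root/.kube/config /opt/kubeconfig

# Edit the file and add the token from above to the credentials

[root@K8s-master1 ~]# vim /opt/kubeconfig

Copy the kubeconfig file to your desktop; it can then be used to log in to the dashboard.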
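The edit in `vim` amounts to adding a `token:` key under the `user:` entry of the copied file. Below is a minimal sketch of the same step. It assumes the file is a kubeadm-generated admin config whose last block is the `user:` mapping (keys indented four spaces) and that the file ends with a newline. The helper name `add_token` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a bearer token to the last user entry of a kubeconfig.
# Assumes the file ends with the "user:" mapping, whose keys are indented four spaces.
add_token() {  # usage: add_token <kubeconfig> <token>
  printf '    token: %s\n' "$2" >> "$1"
}
```

If the file is laid out differently, `kubectl config set-credentials <user> --token=<token> --kubeconfig=/opt/kubeconfig` sets the same field without depending on its position in the file. Note that this file then carries cluster-admin credentials, so it should be stored with care.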

4 Test run: Nginx + Tomcat

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

Run Nginx as a test and, by the end, use it to separate static and dynamic requests.

4.1 Run Nginx

[root@K8s-master1 ~/kubeadm-yaml]# cat /root/kubeadm-yaml/nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: harbor.tech.com/baseimages/nginx:1.18.0
          ports:
            - containerPort: 80
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: test-nginx-service-label
  name: test-nginx-service
  namespace: default
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 30004
  selector:
    app: nginx

[root@K8s-master1 ~/kubeadm-yaml]#

Apply nginx.yaml

[root@K8s-master1 ~/kubeadm-yaml]# pwd

/root/kubeadm-yaml

[root@K8s-master1 ~/kubeadm-yaml]# kubectl apply -f nginx.yaml

deployment.apps/nginx-deployment created

service/test-nginx-service created

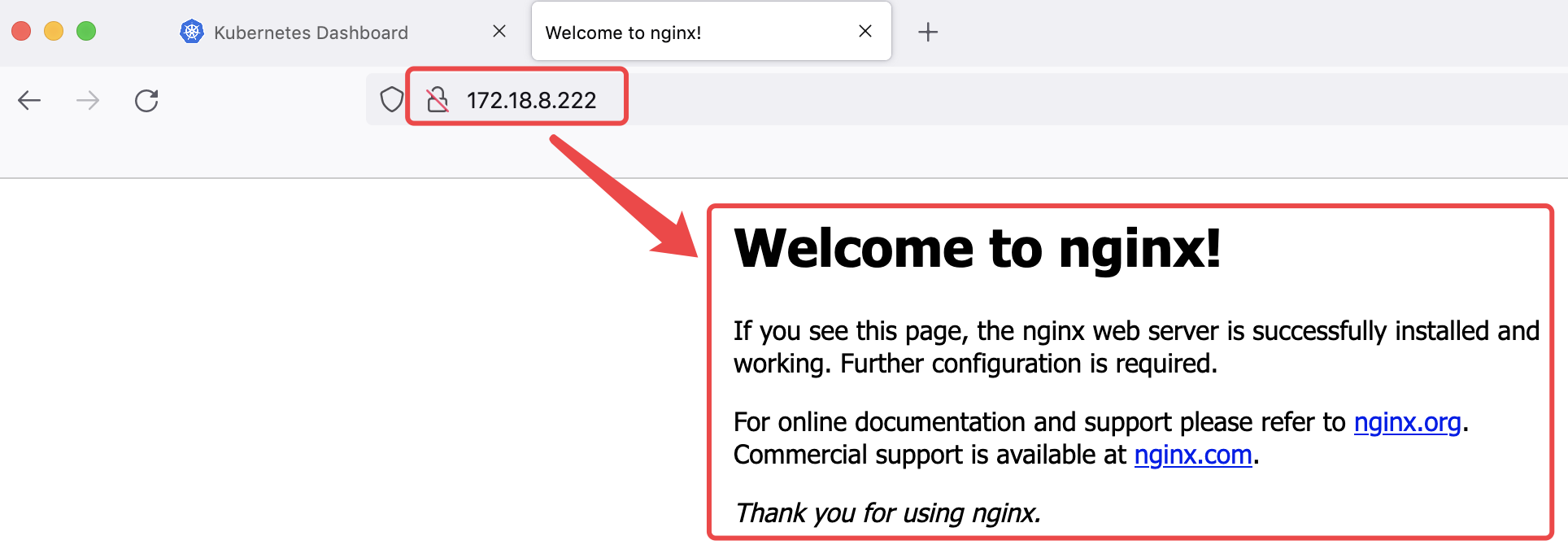

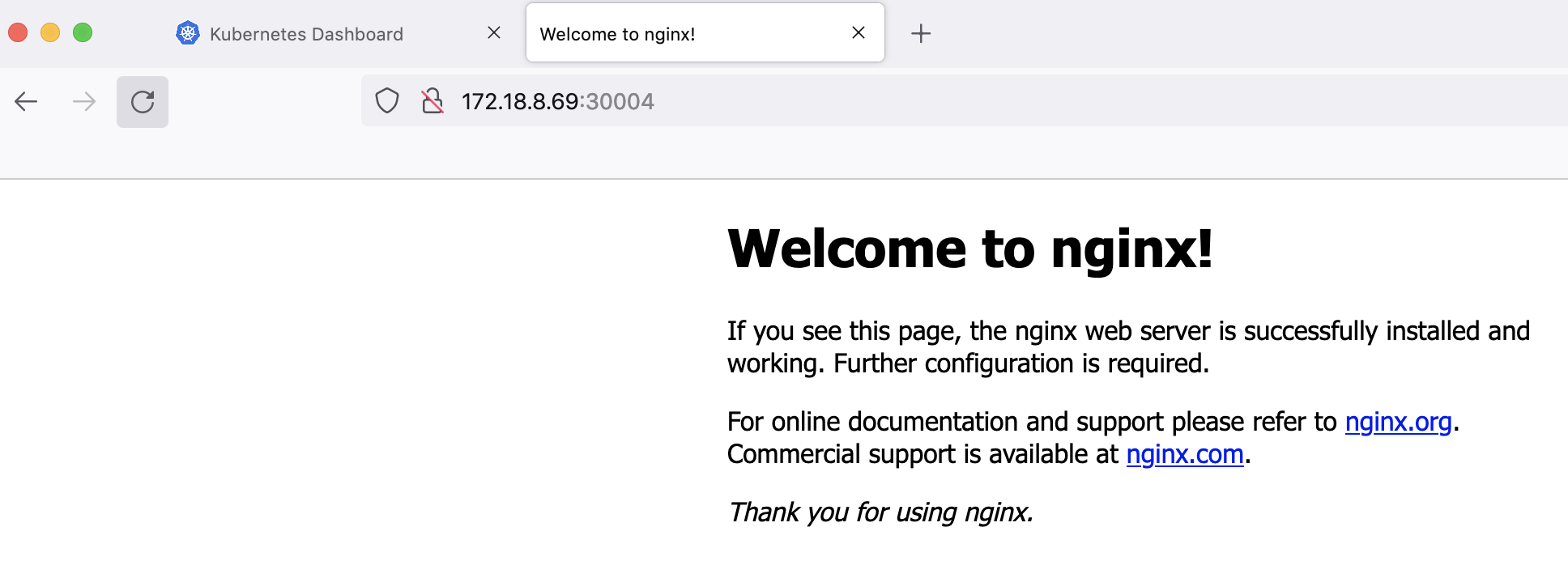

Access test

# IP of any node + port (30004)

172.18.8.69:30004

4.2 Run Tomcat

[root@K8s-master1 ~/kubeadm-yaml]# cat /root/kubeadm-yaml/tomcat.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: tomcat-deployment
  labels:
    app: tomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
        - name: tomcat
          image: harbor.tech.com/baseimages/tomcat-base:v8.5.45
          ports:
            - containerPort: 8080
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: test-tomcat-service-label
  name: test-tomcat-service
  namespace: default
spec:
  # type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      # nodePort: 30005
  selector:
    app: tomcat

[root@K8s-master1 ~/kubeadm-yaml]#

Apply the Tomcat YAML file

[root@K8s-master1 ~/kubeadm-yaml]# pwd

/root/kubeadm-yaml

[root@K8s-master1 ~/kubeadm-yaml]# kubectl apply -f tomcat.yaml

deployment.apps/tomcat-deployment created

service/test-tomcat-service created

[root@K8s-master1 ~/kubeadm-yaml]#

[root@K8s-master1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 125m

net-test2 1/1 Running 0 125m

nginx-deployment-67dfd6c8f9-q8s72 1/1 Running 0 49m

tomcat-deployment-6c44f58b47-bcwdr 1/1 Running 0 48m

[root@K8s-master1 ~]#

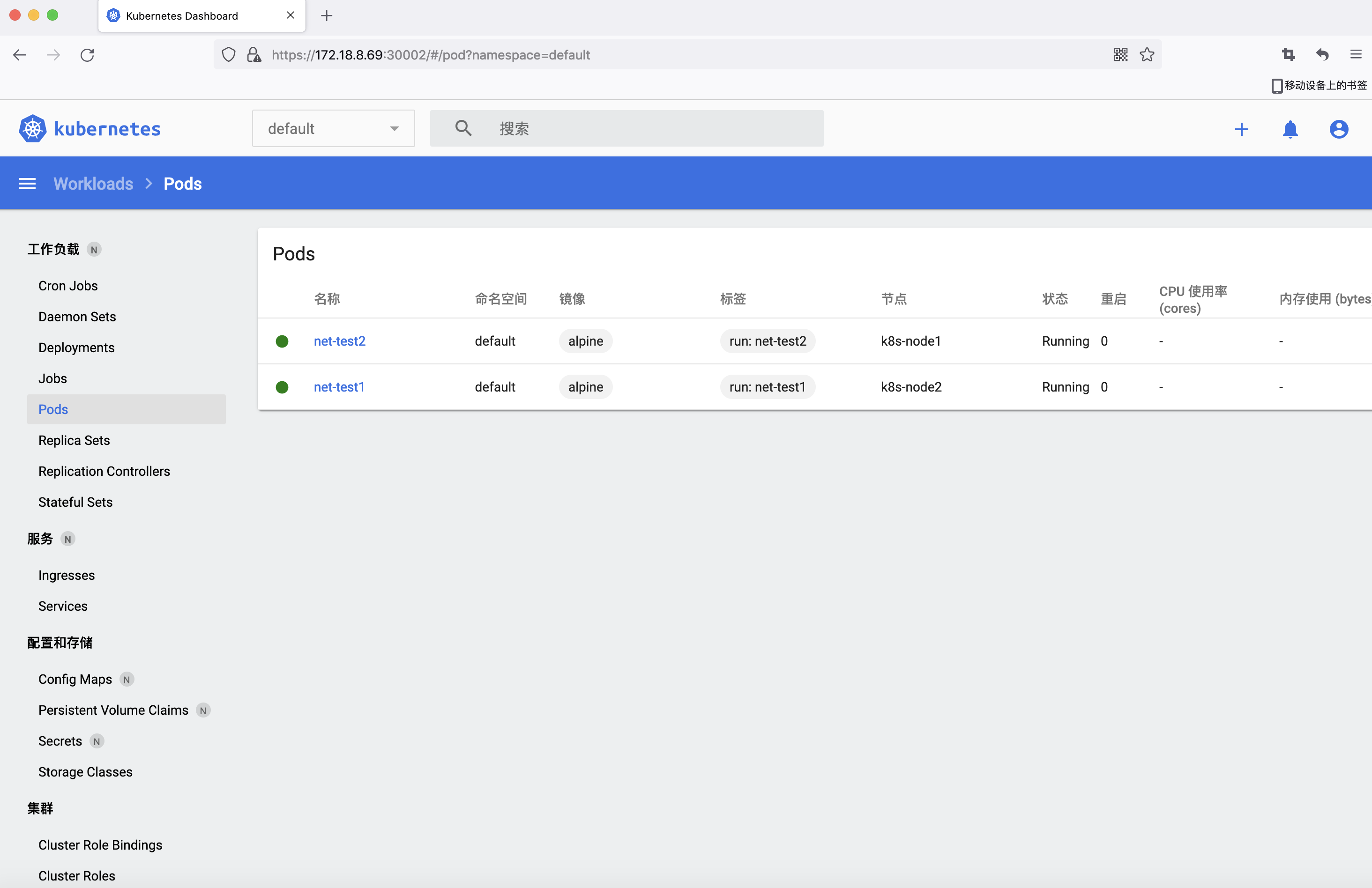

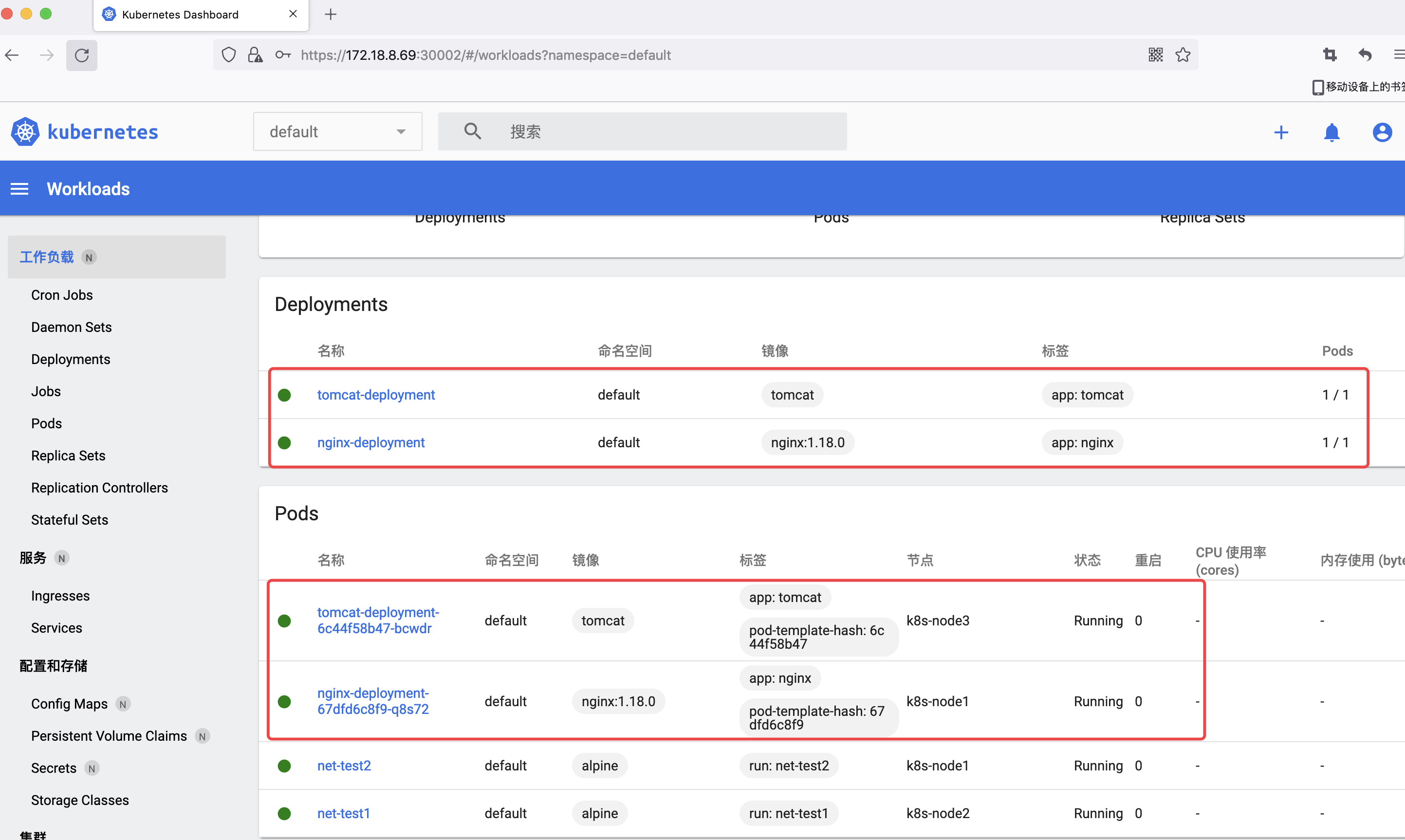

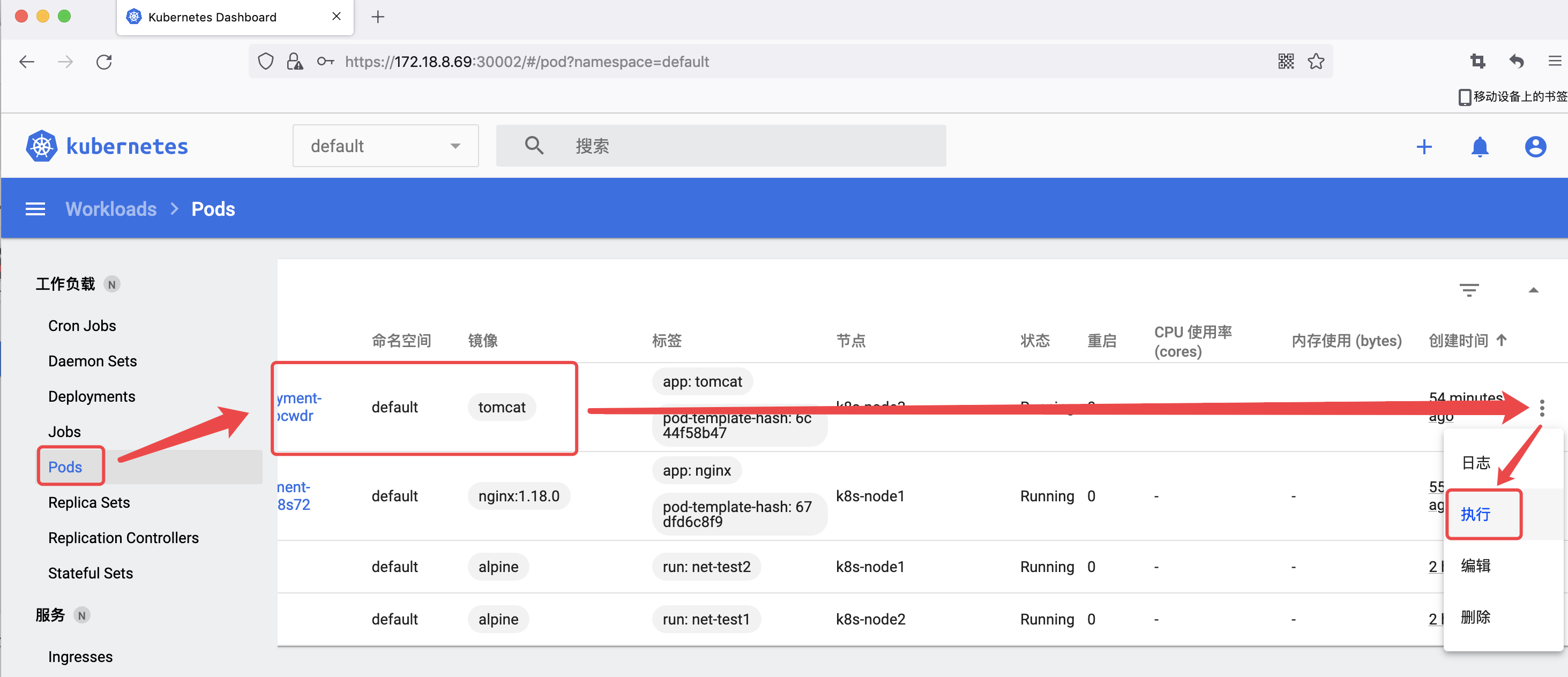

4.3 Verify the pods in the dashboard

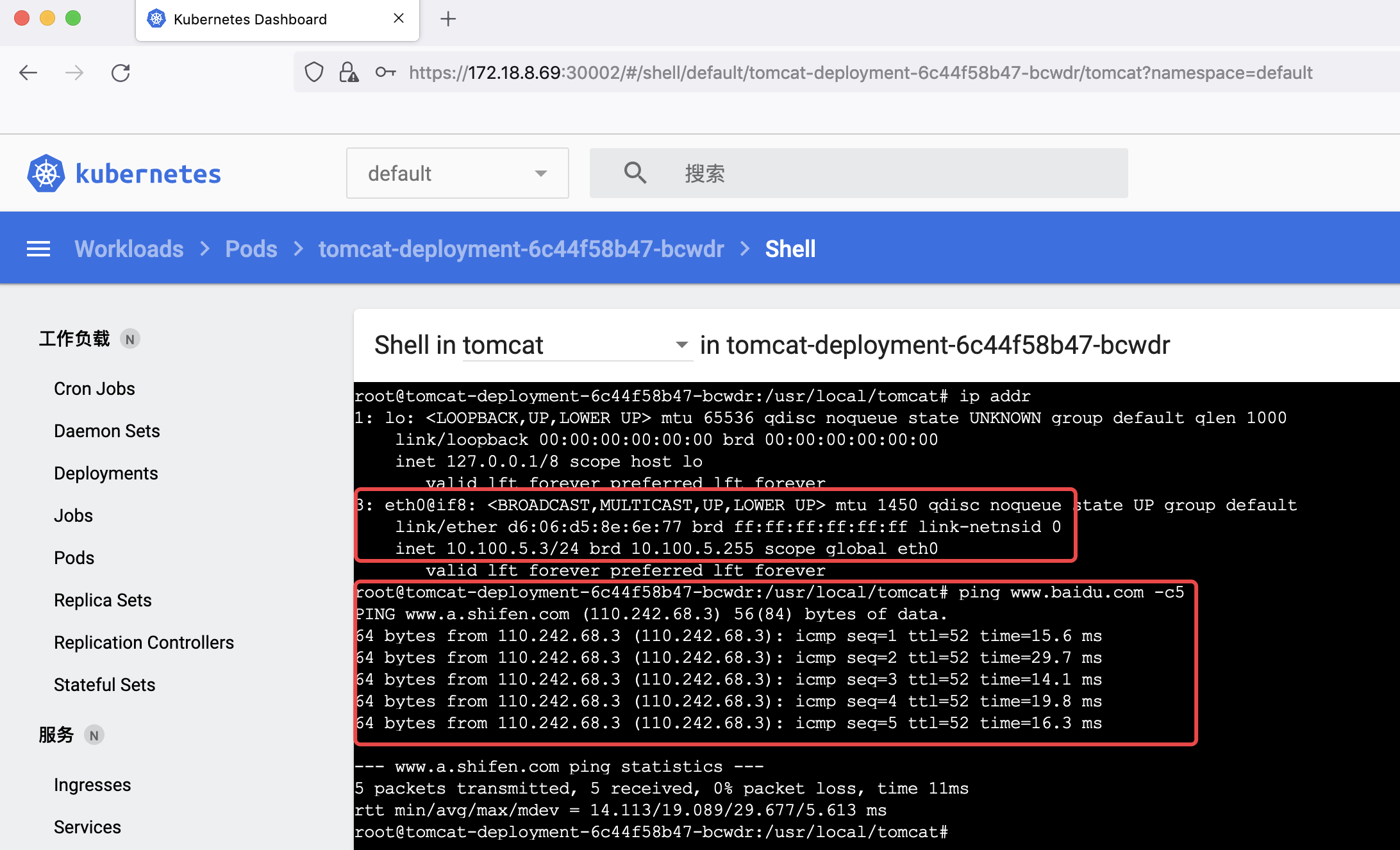

4.4 Enter a container from the dashboard

Verify pod-to-pod communication

4.5 Enter the Tomcat pod and create an app

4.5.1 Create the app

[root@K8s-master1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 2d21h

net-test2 1/1 Running 0 2d21h

nginx-deployment-67dfd6c8f9-q8s72 1/1 Running 0 2d20h

tomcat-deployment-6c44f58b47-f8tk4 1/1 Running 0 6h1m

[root@K8s-master1 ~]# kubectl describe pod tomcat-deployment-6c44f58b47-f8tk4

[root@K8s-master1 ~]# kubectl exec -it tomcat-deployment-6c44f58b47-f8tk4 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@tomcat-deployment-6c44f58b47-f8tk4:/usr/local/tomcat# cd webapps

root@tomcat-deployment-6c44f58b47-f8tk4:/usr/local/tomcat/webapps# mkdir tomcat

root@tomcat-deployment-6c44f58b47-f8tk4:/usr/local/tomcat/webapps# echo "<h1>Tomcat test page for pod</h1>" > tomcat/index.jsp

root@tomcat-deployment-6c44f58b47-f8tk4:/usr/local/tomcat/webapps#
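The warning in the transcript refers to the separator form `kubectl exec POD -- COMMAND`. As a sketch of the same step done non-interactively with that form (the function name `make_test_page` and the `KUBECTL` override variable are hypothetical; the path is taken from the shell prompt shown above):

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden (for example with "echo") to preview the command.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: create the test page inside a Tomcat pod in one call.
make_test_page() {  # usage: make_test_page <pod-name>
  "$KUBECTL" exec "$1" -- sh -c \
    'mkdir -p /usr/local/tomcat/webapps/tomcat &&
     echo "<h1>Tomcat test page for pod</h1>" > /usr/local/tomcat/webapps/tomcat/index.jsp'
}
```

For the pod in this walkthrough the call would be `make_test_page tomcat-deployment-6c44f58b47-f8tk4`.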

4.6 Separate static and dynamic requests with Nginx

4.6.1 Nginx configuration

[root@K8s-master1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 2d21h

net-test2 1/1 Running 0 2d21h

nginx-deployment-67dfd6c8f9-q8s72 1/1 Running 0 2d20h

tomcat-deployment-6c44f58b47-f8tk4 1/1 Running 0 6h4m

[root@K8s-master1 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.200.0.1 <none> 443/TCP 3d1h

test-nginx-service NodePort 10.200.150.186 <none> 80:30004/TCP 2d20h

test-tomcat-service ClusterIP 10.200.83.131 <none> 80/TCP 2d20h

[root@K8s-master1 ~]#

# Enter the Nginx pod

kubectl exec -it nginx-deployment-67dfd6c8f9-q8s72 -- bash

cat /etc/issue

# Update the package index and install basic tools

apt update

apt install procps vim iputils-ping net-tools curl

# From the Nginx pod, test access to Tomcat through its service DNS name

[root@K8s-master1 ~]# kubectl exec -it nginx-deployment-67dfd6c8f9-q8s72 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@nginx-deployment-67dfd6c8f9-q8s72:/# ping test-tomcat-service # test service name resolution; a ClusterIP often does not answer ICMP, so the packet loss below is not a fault

PING test-tomcat-service.default.svc.song.local (10.200.83.131) 56(84) bytes of data.

^C

--- test-tomcat-service.default.svc.song.local ping statistics ---

35 packets transmitted, 0 received, 100% packet loss, time 854ms

root@nginx-deployment-67dfd6c8f9-q8s72:/# curl test-tomcat-service.default.svc.song.local/tomcat/index.jsp

<h1>Tomcat test page for pod</h1>

root@nginx-deployment-67dfd6c8f9-q8s72:/#
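The name that `ping` printed follows the usual Kubernetes pattern `<service>.<namespace>.svc.<cluster-domain>`; `song.local` is this cluster's domain, as seen in the output above. A small sketch that assembles such a name (the function `svc_fqdn` is hypothetical, and its `cluster.local` fallback is the common default rather than this cluster's setting):

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the in-cluster DNS name of a Service.
svc_fqdn() {  # usage: svc_fqdn <service> [namespace] [cluster-domain]
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}
```

Inside a pod it could be used as `curl "http://$(svc_fqdn test-tomcat-service default song.local)/tomcat/index.jsp"`.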

# Edit the Nginx configuration to separate static and dynamic requests: any request whose URI starts with /tomcat is forwarded to Tomcat

root@nginx-deployment-67dfd6c8f9-q8s72:/# vim /etc/nginx/conf.d/default.conf

location /tomcat {
    proxy_pass http://test-tomcat-service.default.svc.song.local;
}

# Test the Nginx configuration

root@nginx-deployment-67dfd6c8f9-q8s72:/# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

# Reload the configuration

root@nginx-deployment-67dfd6c8f9-q8s72:/# nginx -s reload

2021/07/27 08:29:30 [notice] 602#602: signal process started

root@nginx-deployment-67dfd6c8f9-q8s72:/#
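Because `proxy_pass` is given without a URI part here, Nginx forwards the request URI unchanged, so `/tomcat/index.jsp` reaches the `tomcat` directory created under `webapps` earlier. The same edit can be scripted. This is a sketch only: it assumes a `default.conf` with a single `server` block that contains a `server_name` line, and the helper name `add_proxy_location` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print <conf> with a proxy location inserted right after
# the first server_name line. It matches text and does not parse Nginx syntax.
add_proxy_location() {  # usage: add_proxy_location <conf> <prefix> <upstream-url>
  awk -v prefix="$2" -v upstream="$3" '
    { print }
    /server_name/ && !inserted {
      print "    location " prefix " {"
      print "        proxy_pass " upstream ";"
      print "    }"
      inserted = 1
    }
  ' "$1"
}
```

The output goes to stdout, so it can be written to a temporary file and checked with `nginx -t` before it replaces the live configuration.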

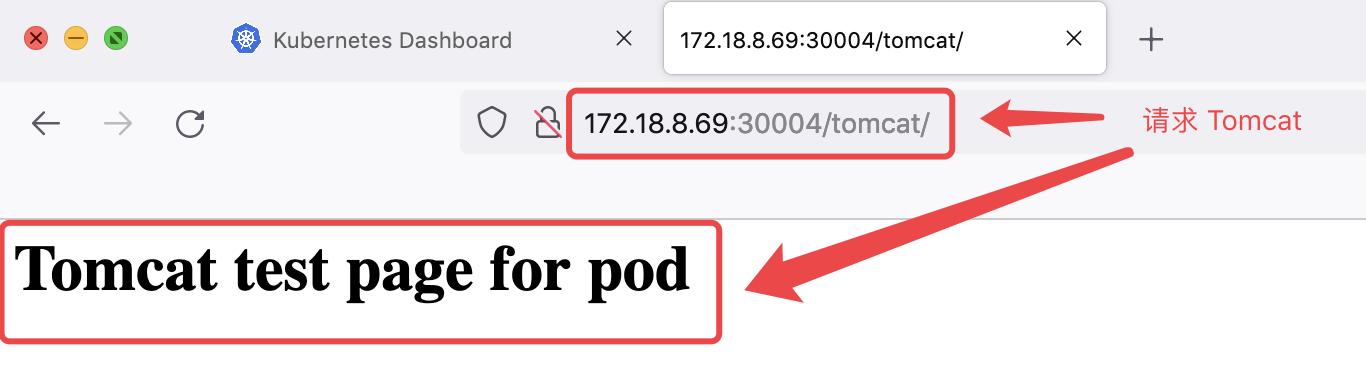

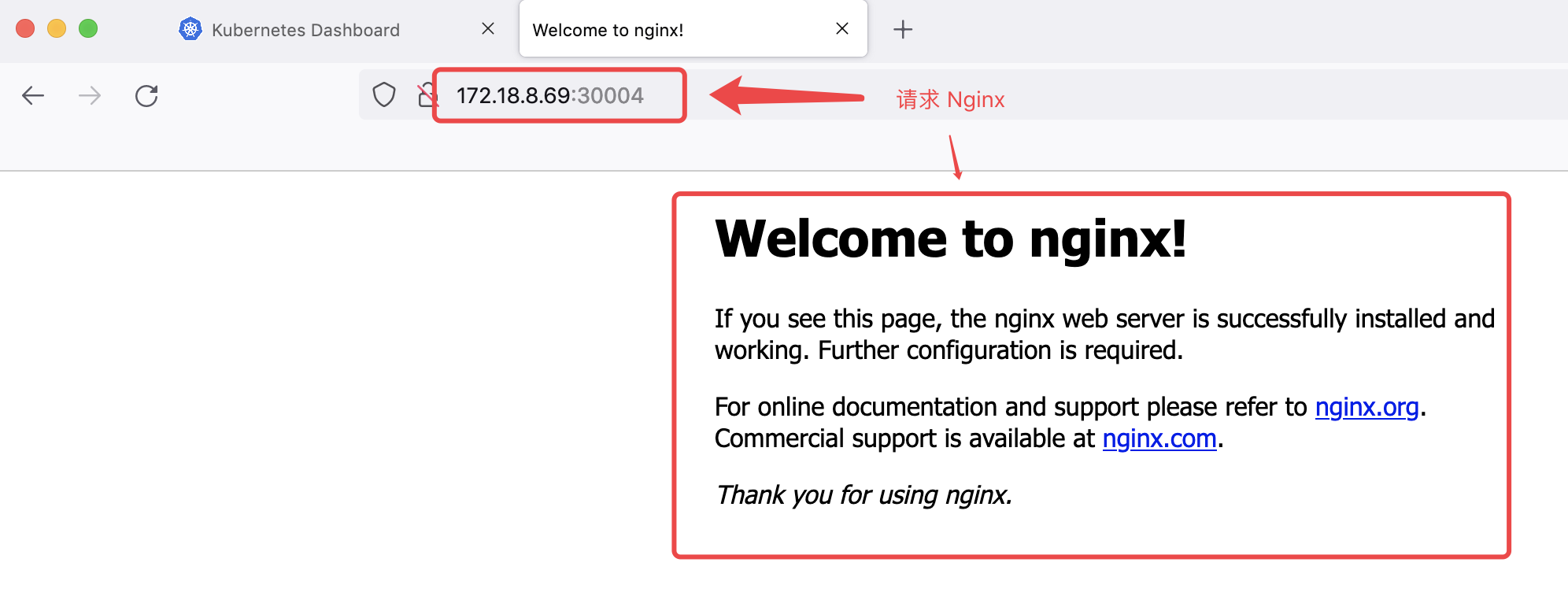

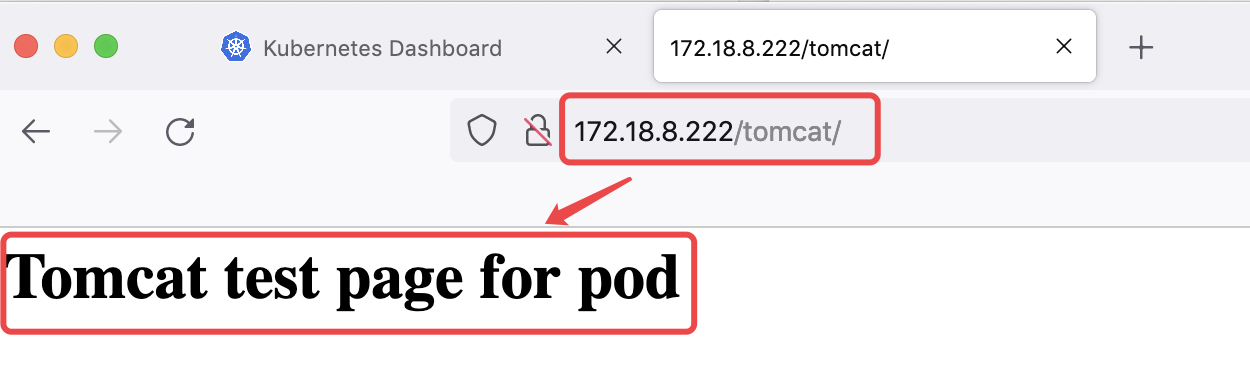

4.6.2 Test access to the web pages

172.18.8.69:30004/tomcat/

172.18.8.69:30004

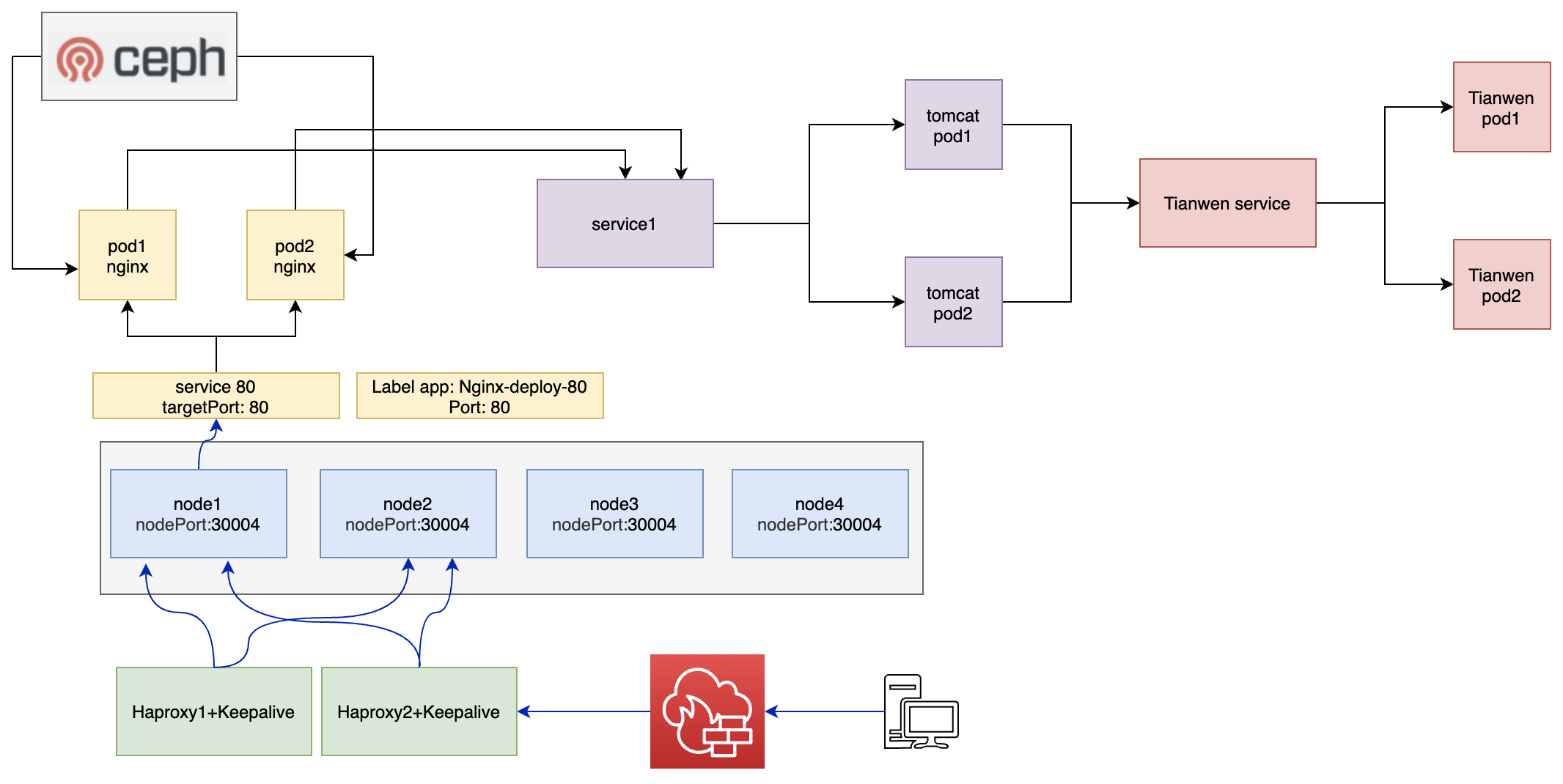

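4.7 Highly available reverse proxy with HAProxy

Use HAProxy and keepalived to build a highly available reverse proxy that reaches the application pods running in the Kubernetes cluster. For this test the reverse proxy can reuse the one already set up for K8s; a production environment should use dedicated reverse proxy servers.

4.7.1 keepalived VIP configuration

Configure a separate VIP for the services running in k8s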

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.18.8.111/24 dev eth0 label eth0:1    # haproxy: bind 172.18.8.111:6443
        172.18.8.222/24 dev eth0 label eth0:2    # haproxy: bind 172.18.8.222:80
    }
}

systemctl restart keepalived

4.7.2 HAProxy configuration

listen k8s-6443
    bind 172.18.8.111:6443
    mode tcp
    balance roundrobin
    server 172.18.8.9 172.18.8.9:6443 check inter 3s fall 3 rise 5      # k8s-master1
    server 172.18.8.19 172.18.8.19:6443 check inter 3s fall 3 rise 5    # k8s-master2
    server 172.18.8.29 172.18.8.29:6443 check inter 3s fall 3 rise 5    # k8s-master3

listen nginx-80
    bind 172.18.8.222:80
    mode tcp
    balance roundrobin
    server 172.18.8.39 172.18.8.39:30004 check inter 3s fall 3 rise 5   # k8s-node1
    server 172.18.8.49 172.18.8.49:30004 check inter 3s fall 3 rise 5   # k8s-node2
    server 172.18.8.59 172.18.8.59:30004 check inter 3s fall 3 rise 5   # k8s-node3
    server 172.18.8.69 172.18.8.69:30004 check inter 3s fall 3 rise 5   # k8s-node4
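The four `server` lines in `nginx-80` differ only in the node IP, so they can be generated from a list when nodes are added or removed. A sketch with a hypothetical helper `gen_servers`; the check parameters are copied from the configuration above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one HAProxy "server" line per backend IP.
gen_servers() {  # usage: gen_servers <port> <ip>...
  local port="$1" ip
  shift
  for ip in "$@"; do
    printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$ip" "$ip" "$port"
  done
}
```

For example, `gen_servers 30004 172.18.8.39 172.18.8.49 172.18.8.59 172.18.8.69` prints the backend lines of the `nginx-80` section without the trailing node-name comments.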

4.7.3 Test access through the VIP

172.18.8.222/tomcat

172.18.8.222